3. Cracking problem with X- FEM#

3.1. General problem#

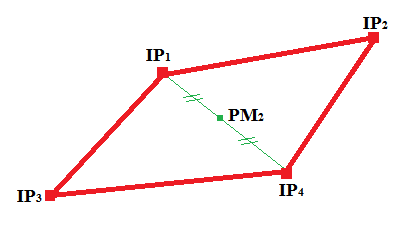

In this part, we recall the equations of the general problem of a cracked structure. We consider a crack \({\Gamma }_{c}\) in a domain \(\Omega \in {\Re }^{3}\) delimited by \(\partial \Omega\) of external normal \({n}_{\mathrm{ext}}\). The lips of the crack are noted \({\Gamma }^{1}\) and \({\Gamma }^{2}\) of external normals \({n}^{1}\) and \({n}^{2}\). The constraint and displacement fields are respectively noted \(\sigma\) and \(u\).

Quasi-static loading is imposed on the structure via a density of volume forces \(f\), a density of surface forces \(t\) on \({\Gamma }_{t}\) and a density of surface forces \(g\) on the lips. The solid is embedded on \({\Gamma }_{u}\).

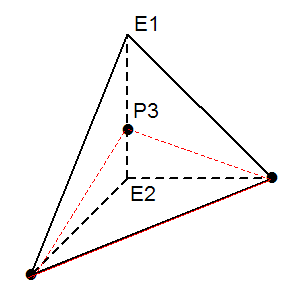

Figure 3.1-1 : General problem notes

The strong form of equilibrium equations and boundary conditions is written as:

\(\begin{array}{}\nabla \cdot \sigma =f\text{dans}\Omega \\ \sigma \cdot {n}_{\mathrm{ext}}=t\text{sur}{\Gamma }_{t}\\ \sigma \cdot {n}^{1}=g\text{sur}{\Gamma }^{1}\\ \sigma \cdot {n}^{2}=g\text{sur}{\Gamma }^{2}\\ u=0\text{sur}{\Gamma }_{u}\end{array}\) eq 3.1-1

We place ourselves in the framework of small deformations and small displacements, for which the deformations-displacements relationship is written:

\(\varepsilon =\varepsilon (u)={\nabla }_{s}u\) eq 3.1-2

where \({\nabla }_{s}\) is the symmetric part of the gradient and \(\varepsilon\) is the strain tensor.

We consider a linear elastic material [1] . The law of behavior of solid \(\Omega\) is written as:

\(\sigma \mathrm{=}C\mathrm{:}\varepsilon \text{dans}\Omega\) eq 3.1-3

where \(C\) is the Hooke tensor.

The principle of virtual work is written as:

\({\int }_{\Omega }\sigma (u):\epsilon (v)d\Omega ={\int }_{\Omega }f\cdot \mathrm{vd}\Omega +{\int }_{{\Gamma }_{t}}t\cdot \mathrm{vd\Gamma }+{\int }_{{\Gamma }_{c}}g\cdot \mathrm{vd\Gamma }\forall v\in {H}_{0}^{1}(\Omega )\) eq 3.1-4

where \({H}_{0}^{1}(O)\) is the Sobolev space of functions whose derivative has an integrable square, cancelling out on \(\partial \mathrm{\Omega }\).

3.2. Enrichment of the displacement approximation#

The main idea is to enrich the base of interpolation functions by partitioning the unit [bib 23]. We recall the classical finite element approximation:

\({u}^{h}(x)=\sum _{i\in {N}_{n}(x)}{a}_{i}{\phi }_{i}(x)\)

where \({a}_{i}\) are the degrees of freedom of movement at node i and \({\phi }_{i}\) are the shape functions associated with node i. \({N}_{n}(x)\) is the set of nodes whose support contains the point \(x\). We equate the support of a node i to the support of the shape functions associated with this node, that is to say to the set of points \(x\) such as \({\phi }_{i}(x)\ne 0\).

The enriched approximation is written as:

\({u}^{h}(x)=\sum _{i\in {N}_{n}(x)}{a}_{i}{\mathrm{\varphi }}_{i}(x)+\sum _{j\in {N}_{n}(x)\cap K}{b}_{j}{\mathrm{\varphi }}_{j}(x){H}_{j}\left(\mathit{lsn}(x)\right)+\sum _{k\in {N}_{n}(x)\cap L}\sum _{\mathrm{\alpha }=1}^{4}{c}_{k}^{\mathrm{\alpha }}{\mathrm{\varphi }}_{k}(x){F}^{\mathrm{\alpha }}\left(\mathit{lsn}(x),\mathit{lst}(x)\right)\)

This expression is composed of 3 terms. The 1st term is the classical term continuous. The 2nd and 3rd terms are enriched terms. Being at the heart of the X- FEM method, these terms are explained in the following paragraphs.

3.2.1. Enrichment with a domain selection function (2nd term)#

Assume that interface \({\Gamma }_{c}\) partitions the domain such as \(\mathrm{\Omega }={\mathrm{\Omega }}_{\text{+}}\cup {\mathrm{\Omega }}_{\text{-}}\). If \({\Gamma }_{c}\) is a crack, the \(\mathrm{\Omega }\) domain is partitioned in the same way by virtually extending \({\Gamma }_{c}\).

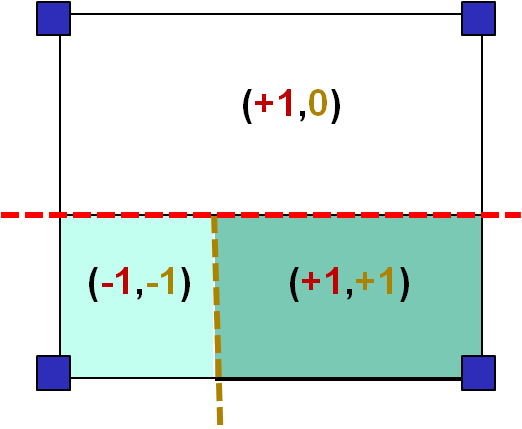

In order to represent the jump in movement through \({\Gamma }_{c}\), we introduce the domain selection function or domain characteristic function \({H}_{j}\left(x\right)\) [bib 76] defined by:

\(\mathit{Si}\phantom{\rule{2em}{0ex}}{x}_{j}\in {\mathrm{\Omega }}_{\text{+}},\phantom{\rule{2em}{0ex}}{H}_{j}(x)=\{\begin{array}{c}\phantom{\rule{2em}{0ex}}0\phantom{\rule{2em}{0ex}}\text{si}\phantom{\rule{0.5em}{0ex}}x\in {\mathrm{\Omega }}_{\text{+}}\\ -2\phantom{\rule{2em}{0ex}}\text{si}\phantom{\rule{0.5em}{0ex}}x\notin {\mathrm{\Omega }}_{\text{+}}\end{array}\)

\(\mathit{Si}\phantom{\rule{2em}{0ex}}{x}_{j}\in {\mathrm{\Omega }}_{\text{-}},\phantom{\rule{2em}{0ex}}{H}_{j}(x)=\{\begin{array}{c}\phantom{\rule{2em}{0ex}}0\phantom{\rule{2em}{0ex}}\text{si}\phantom{\rule{0.5em}{0ex}}x\in {\mathrm{\Omega }}_{\text{-}}\\ +2\phantom{\rule{2em}{0ex}}\text{si}\phantom{\rule{0.5em}{0ex}}x\notin {\mathrm{\Omega }}_{\text{-}}\end{array}\)

Using the normal level set, the quantity \({H}_{j}\left(\text{lsn}\left(x\right)\right)\) is \(0\) if the point \(x\) and the node \({x}_{j}\) are on the same side of the crack and \(\pm 2\) otherwise. The coefficient « 2 » is introduced to have a simpler way of writing the average jump of movement along the interface.

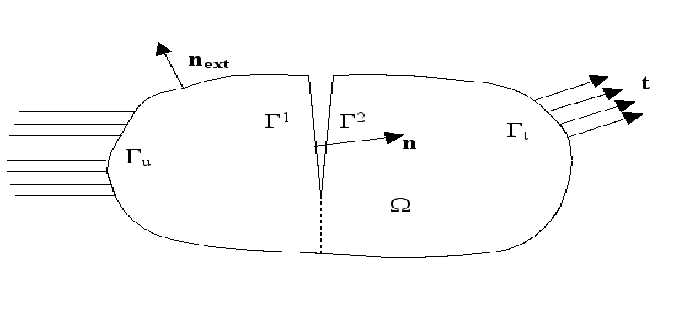

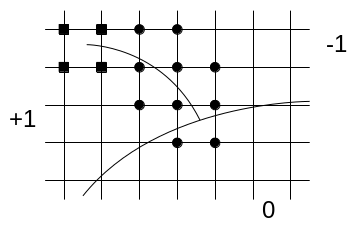

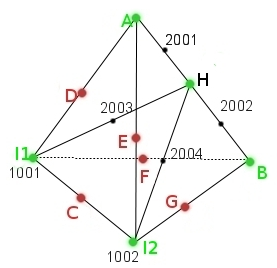

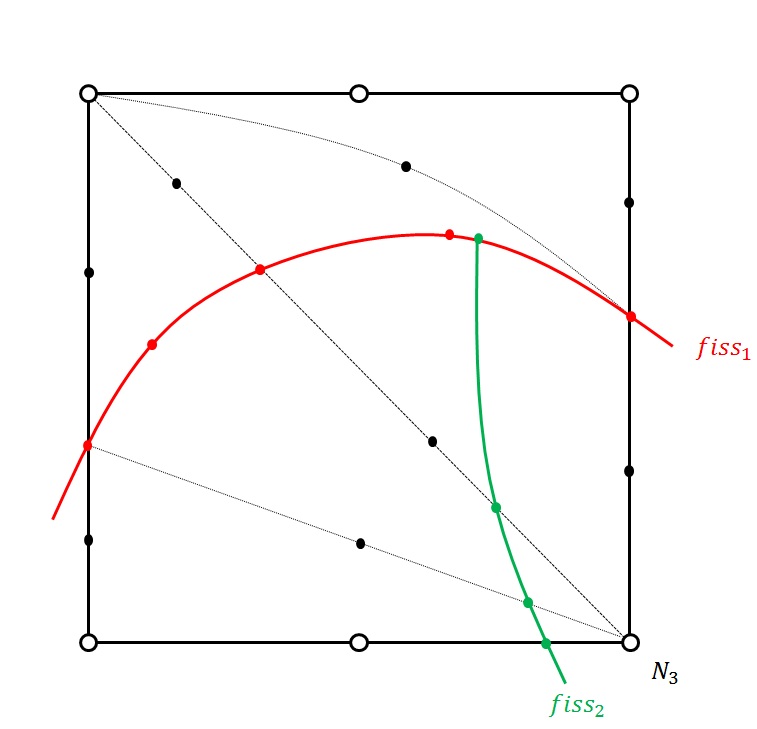

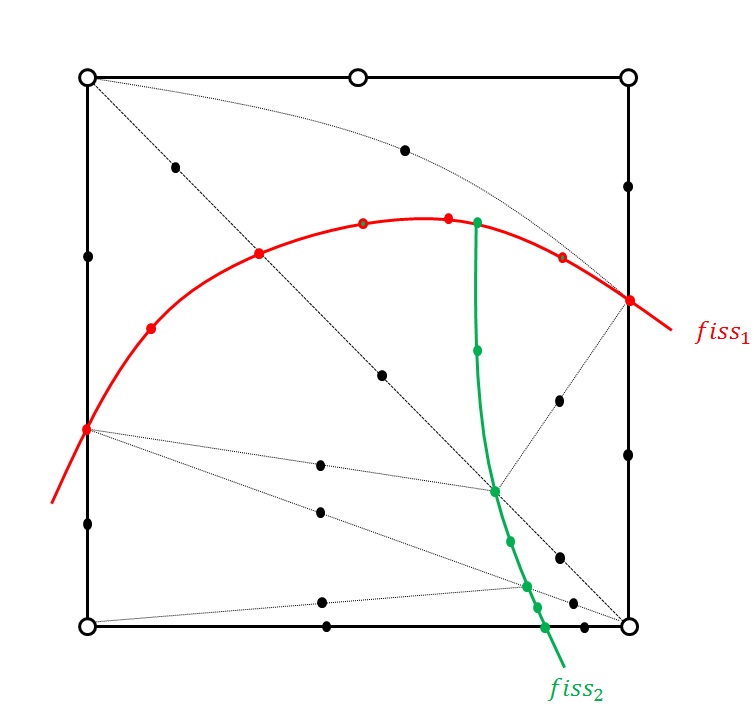

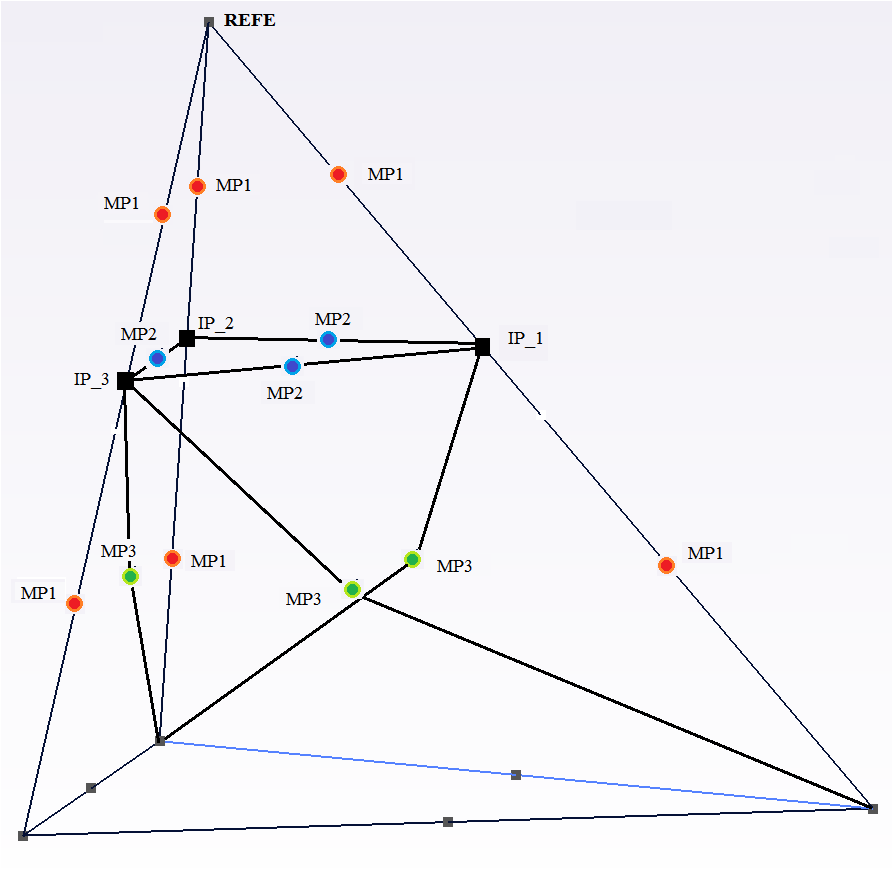

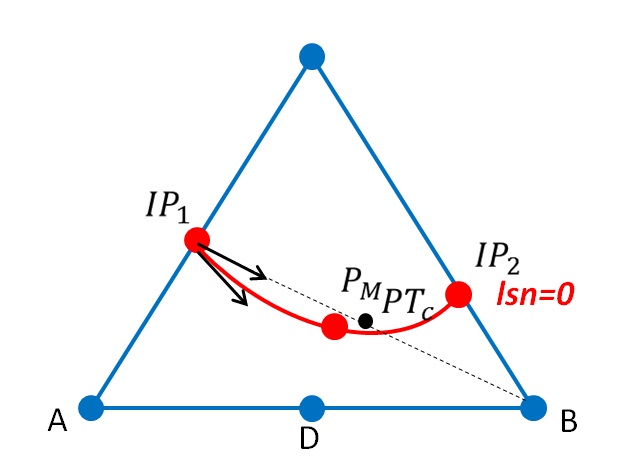

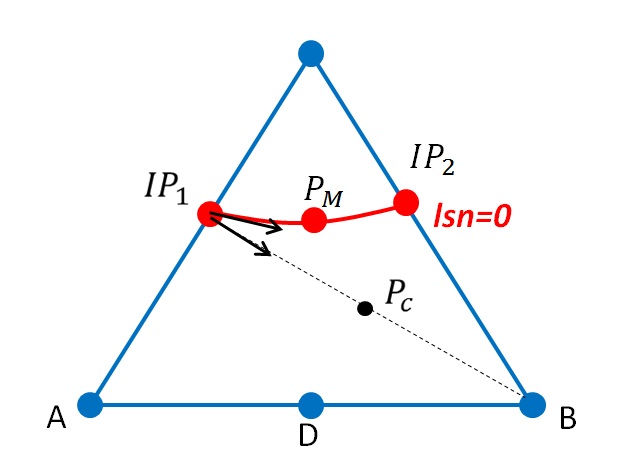

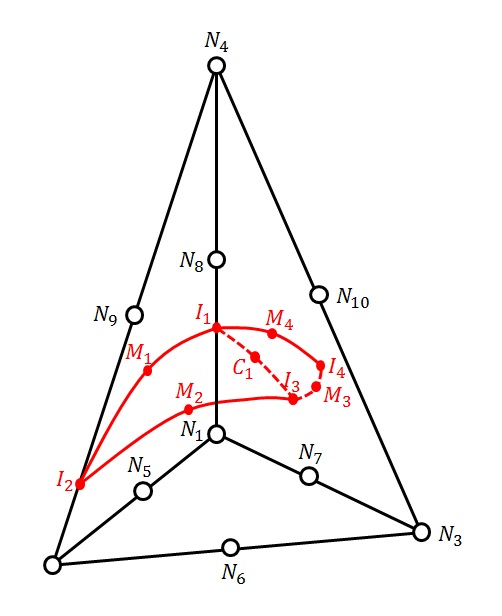

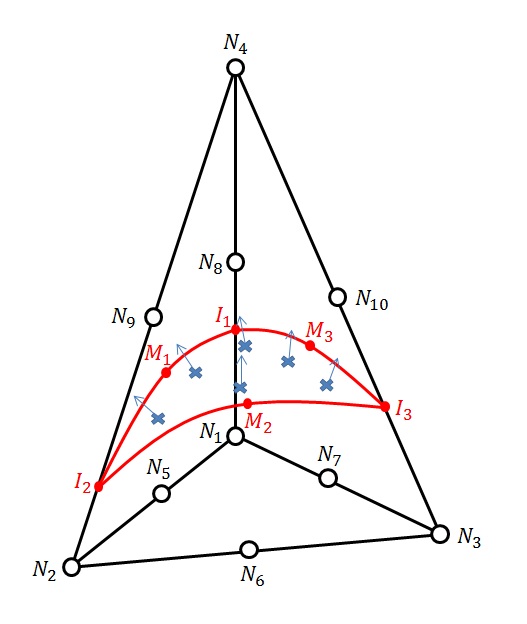

The \({b}_{j}\) are the enriched degrees of freedom. \(K\) is the set of knots whose support is entirely cut by the crack (knots represented by a circle on Figure 3.2.1-1).

Note: for abuse of language, domain selection functions will also be called « Heaviside » functions. But it will be necessary to refer to the definition above, to represent the approximation of the discontinuity of the field of displacement.

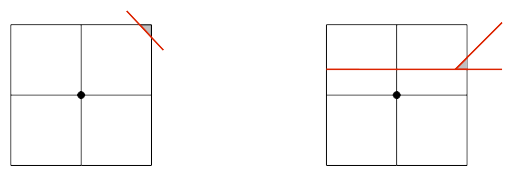

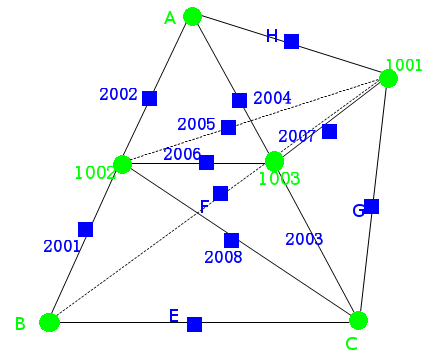

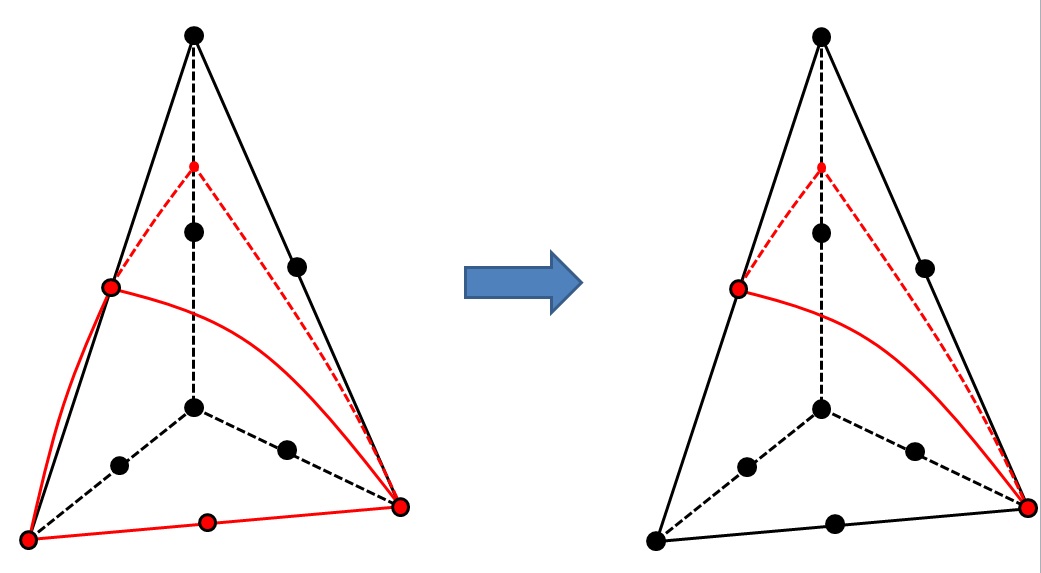

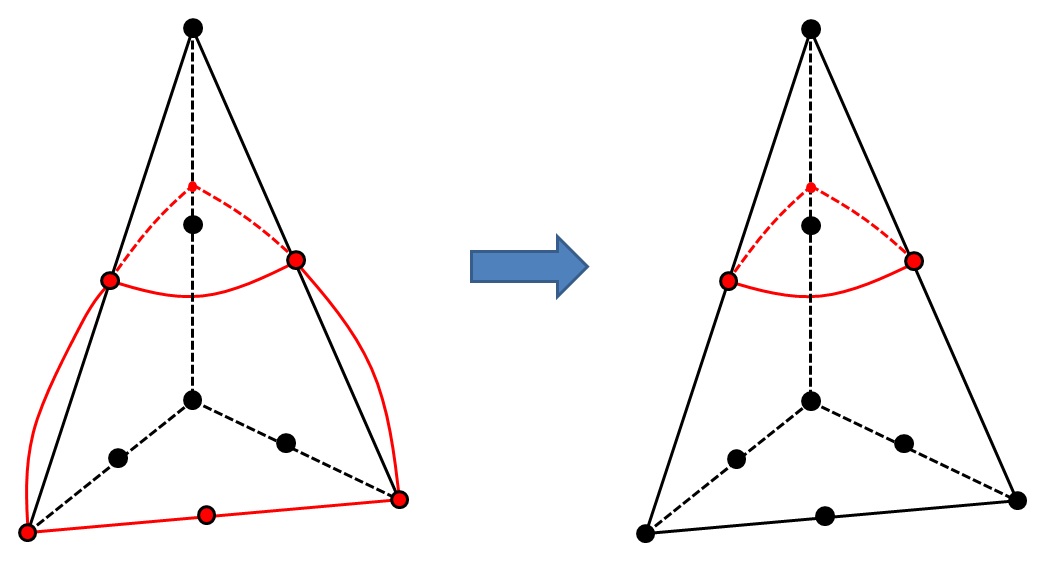

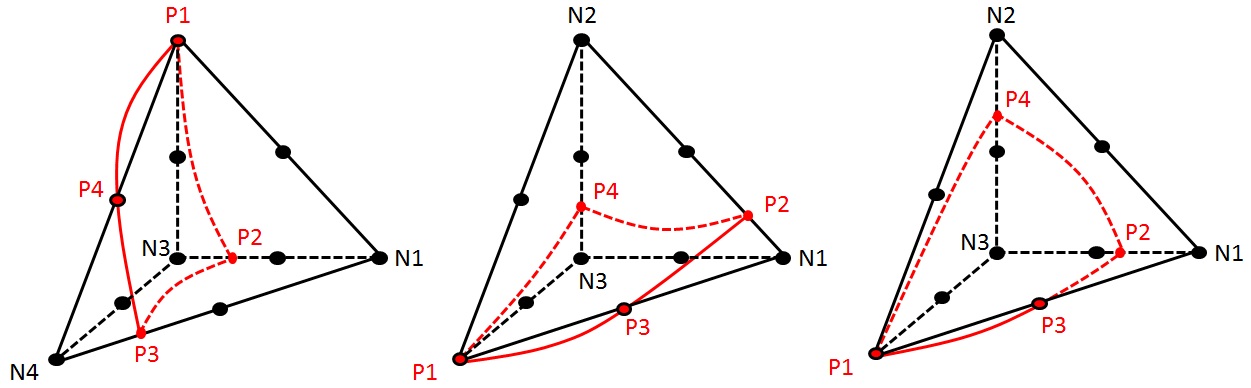

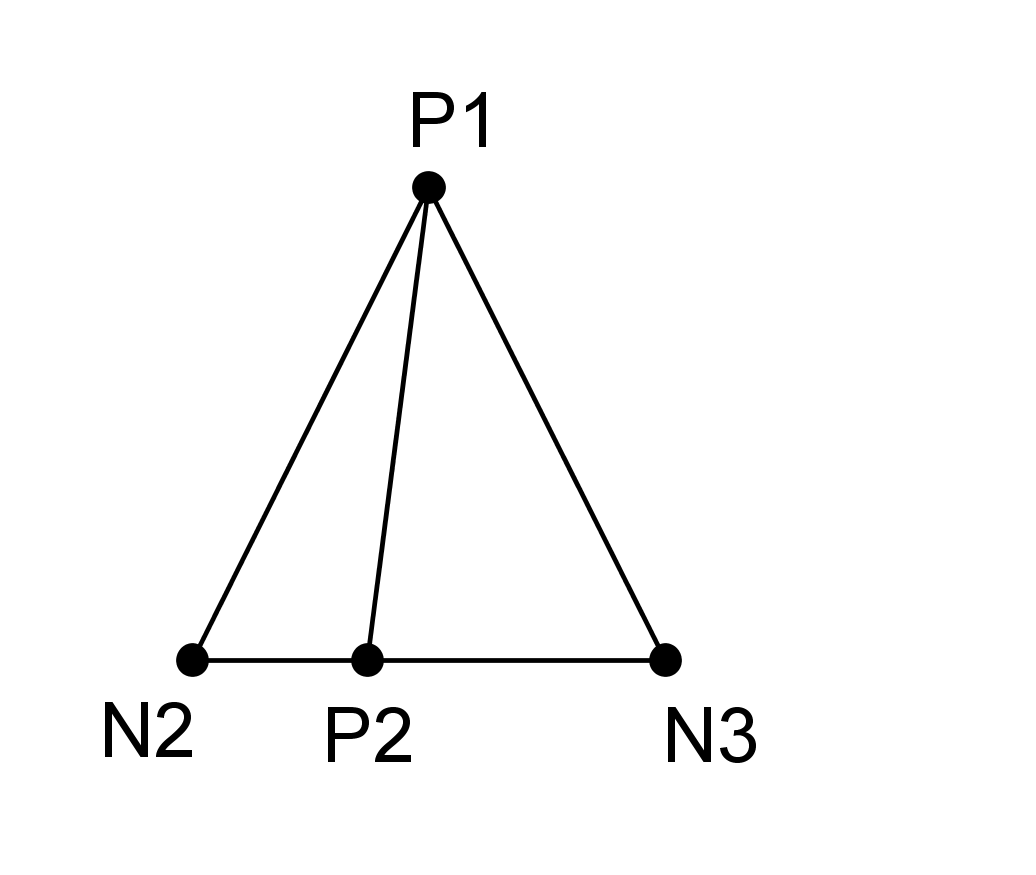

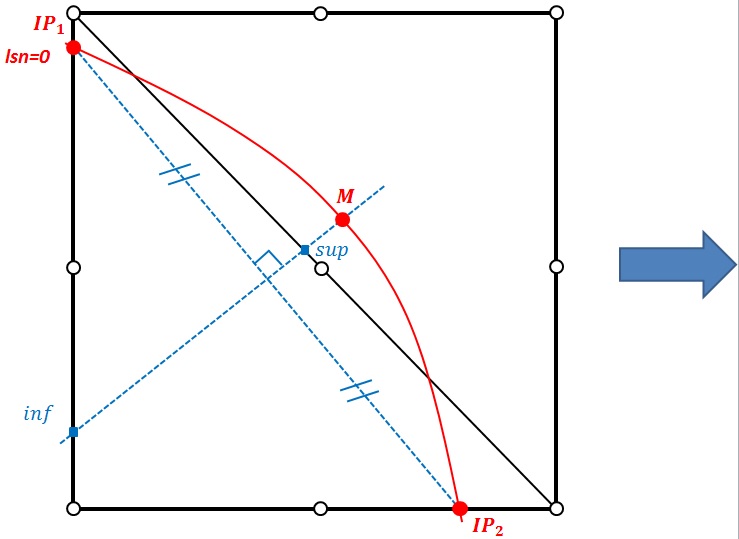

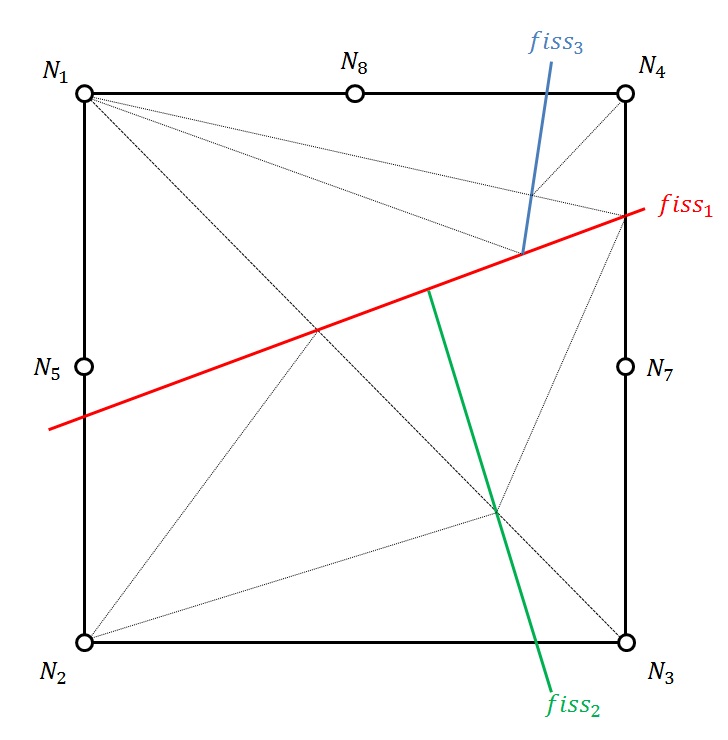

Figure 3.2.1-1: On the left, the « round » nodes are enriched by the Heaviside function and the « square » nodes by the singular functions (topological enrichment). On the right, the « square » nodes are enriched by the singular functions (geometric enrichment).

3.2.2. Enrichment with a domain selection function for a crack branch#

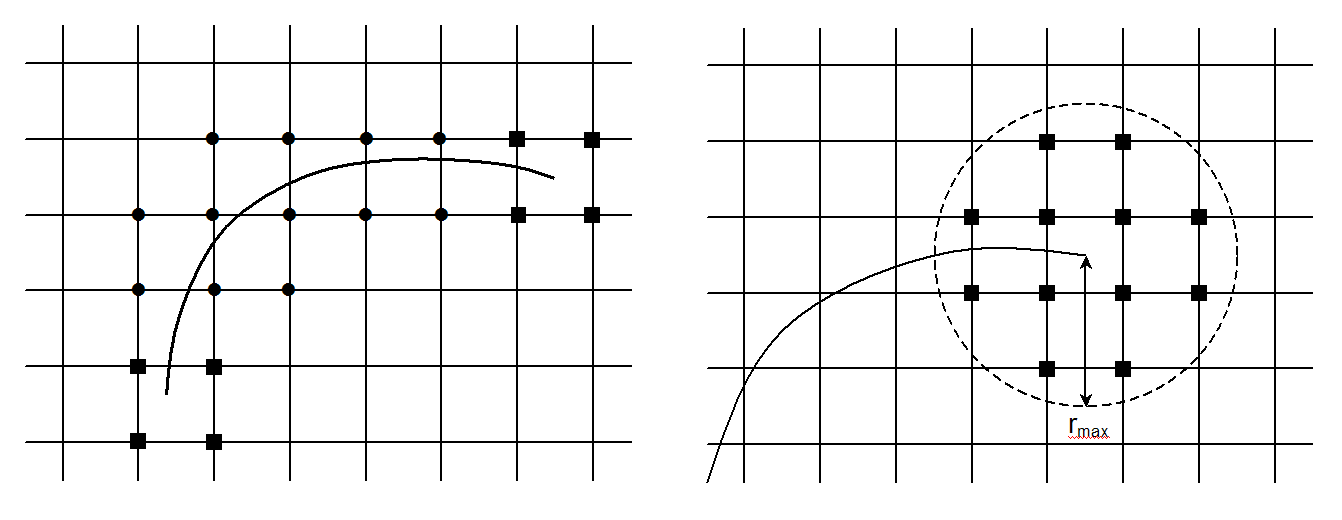

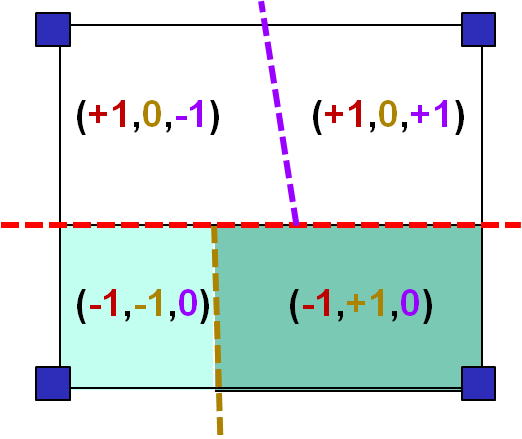

Assume a branch of two discontinuities, which partitions space into three domains such as \(\mathrm{\Omega }={\mathrm{\Omega }}_{\text{1}}\cup {\mathrm{\Omega }}_{\text{2}}\cup {\mathrm{\Omega }}_{\text{3}}\). If it is a branch of two cracks, domain \(\mathrm{\Omega }\) is partitioned in the same way by virtually extending the three crack branches.

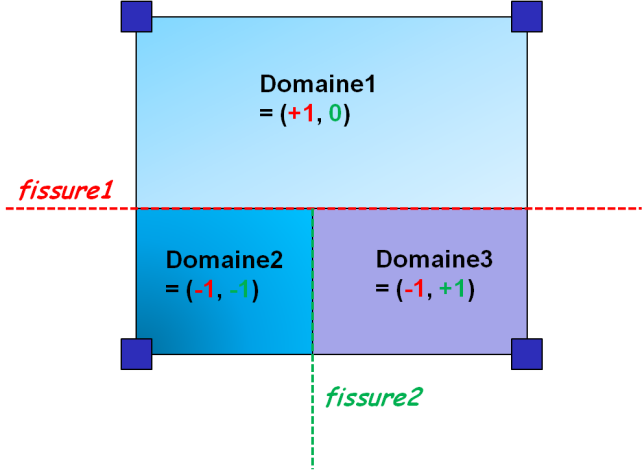

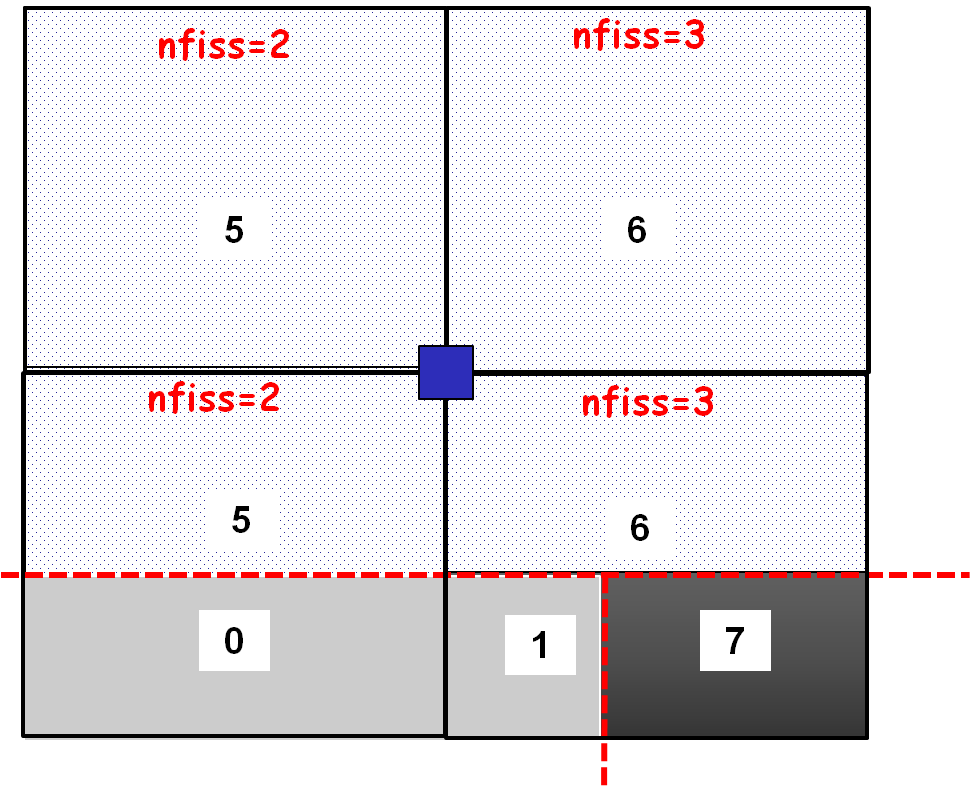

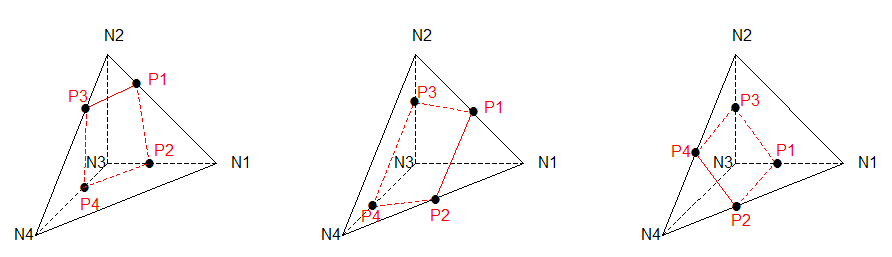

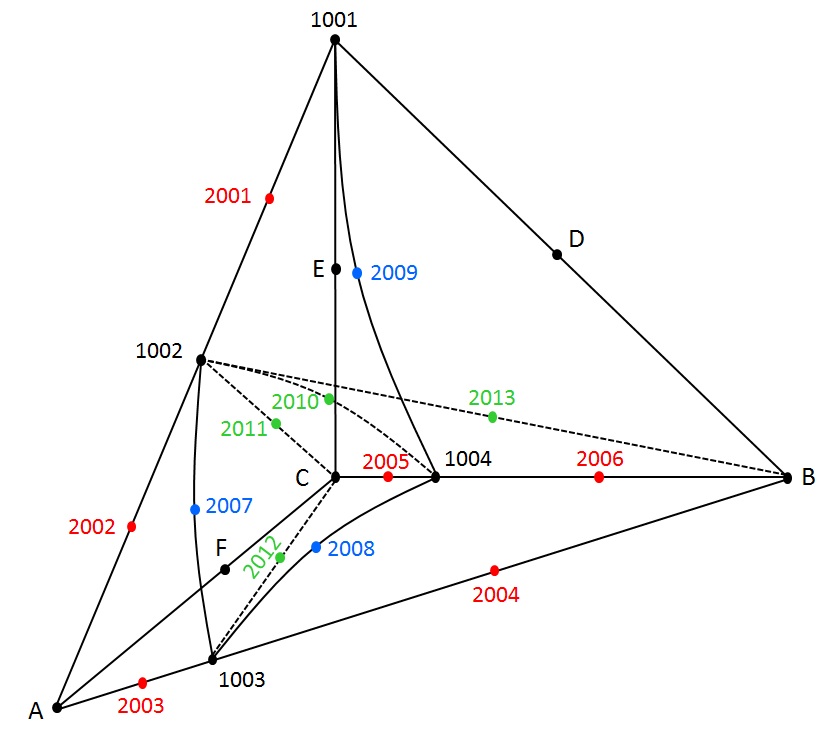

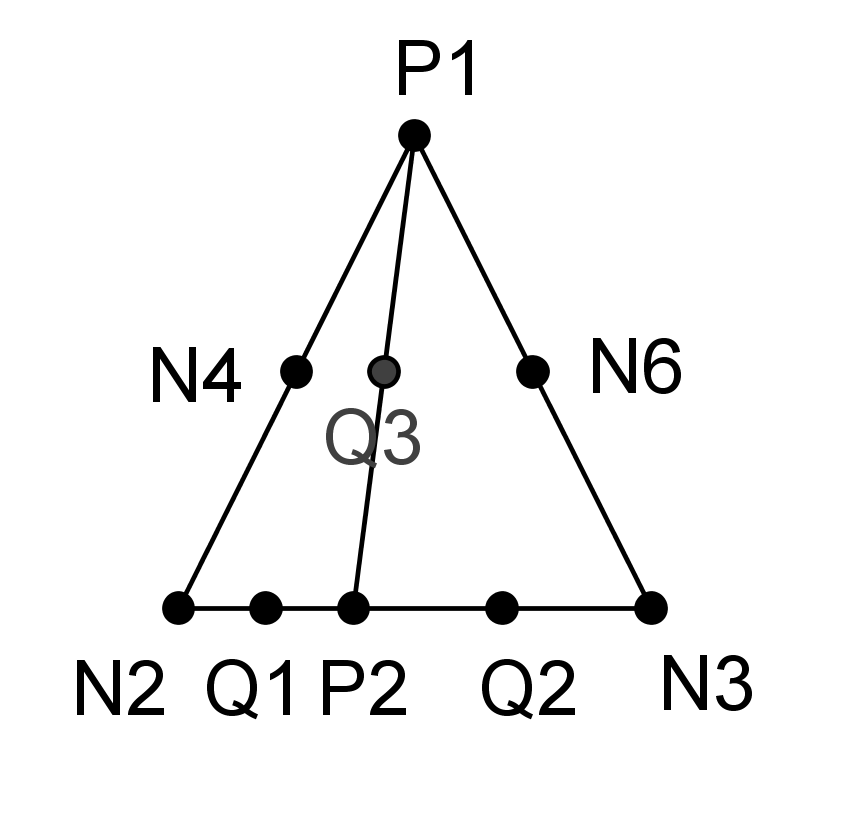

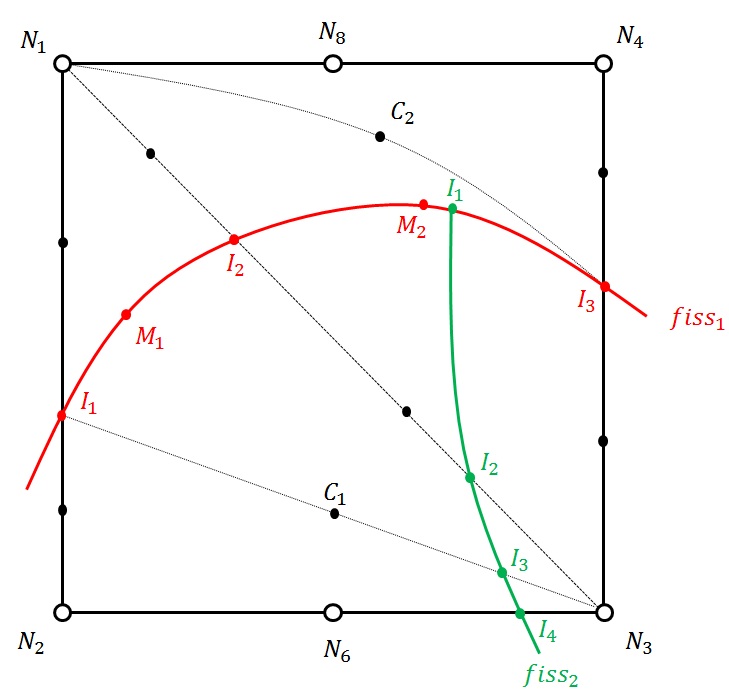

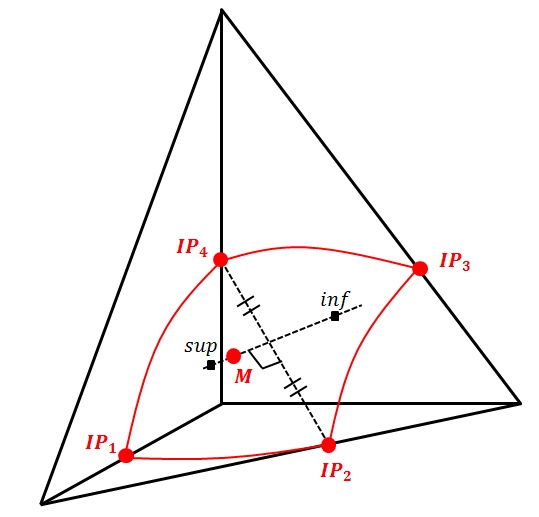

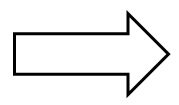

Figure 3.2.2-1partitioning of the domain by a crack junction

For a simple connection, the generalization of domain selection functions leads to the following writing:

\(\begin{array}{c}{u}^{h}(x)=\sum _{i\in {N}_{n}(x)}{a}_{i}{\mathrm{\varphi }}_{i}(x)+\sum _{j\in K\cap {\mathrm{\Omega }}_{\text{1}}}{b}_{j\mathrm{,1}}{\mathrm{\varphi }}_{j}(x){\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{2}}}+{b}_{j\mathrm{,2}}{\mathrm{\varphi }}_{j}(x){\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{3}}}\\ +\sum _{j\in K\cap {\mathrm{\Omega }}_{\text{2}}}{b}_{j\mathrm{,1}}{\mathrm{\varphi }}_{j}(x){\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{1}}}+{b}_{j\mathrm{,2}}{\mathrm{\varphi }}_{j}(x){\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{3}}}\\ +\sum _{j\in K\cap {\mathrm{\Omega }}_{\text{3}}}{b}_{j\mathrm{,1}}{\mathrm{\varphi }}_{j}(x){\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{1}}}+{b}_{j\mathrm{,2}}{\mathrm{\varphi }}_{j}(x){\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{2}}}\end{array}\)

Where \({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{i}}}\) are domain selection functions defined by:

\({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{i}}}(x)=\{\begin{array}{c}\phantom{\rule{2em}{0ex}}2\phantom{\rule{2em}{0ex}}\text{si}\phantom{\rule{0.5em}{0ex}}x\in {\mathrm{\Omega }}_{\text{i}}\\ \phantom{\rule{2em}{0ex}}0\phantom{\rule{2em}{0ex}}\text{si}\phantom{\rule{0.5em}{0ex}}x\notin {\mathrm{\Omega }}_{\text{i}}\end{array}\)

However, the topological description of the three related domains (Ω1, Ω2, and Ω3) is not trivial, considering the only information provided by the level-sets. Levet-sets make it possible to represent a change in scalar sign through a discontinuity. Whereas we want to represent the partitioning of space in the vicinity of the discontinuity.

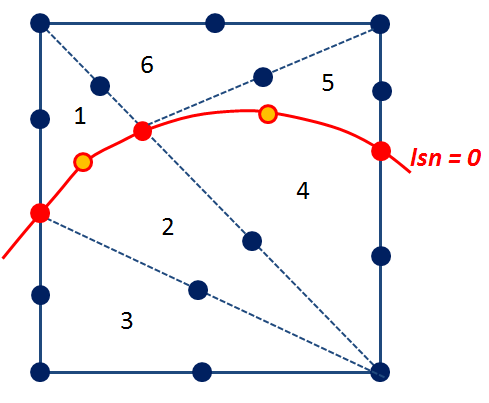

From an « elementary » point of view, we note that the sign field of level-sets, read in vector form, corresponds approximately to the partitioning we want to achieve. This partitioning, thanks to the vectorization of scalar level-sets, then makes it possible to reuse the Code_Aster data structures advantageously. However, elementary partitioning alone is insufficient, to represent the « ddl domain » (χ Ωφi).

Note:

In fact, the « ddl domain » corresponds to the collection of partitions of the domain Ω across the entire support of node i. On the support of each node, it is therefore essential to concatenate the elementary partitions corresponding to a given « ddl domain » . This operation is not trivial since sign fields evolve from one element to another (as scalar sign fields are not extended over all elements, to locate the definition of a crack on a few elements of interest, also called « near band » of enrichment). An algorithm dedicated to the concatenation of elementary partitioning has been developed to solve this fundamental difficulty.

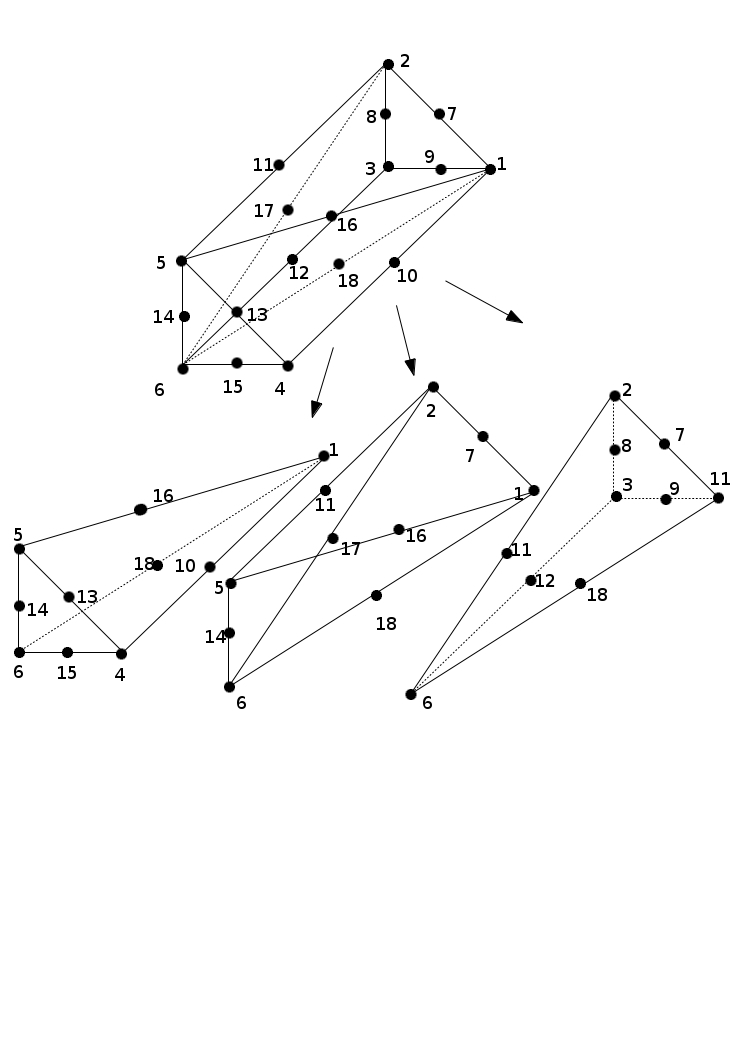

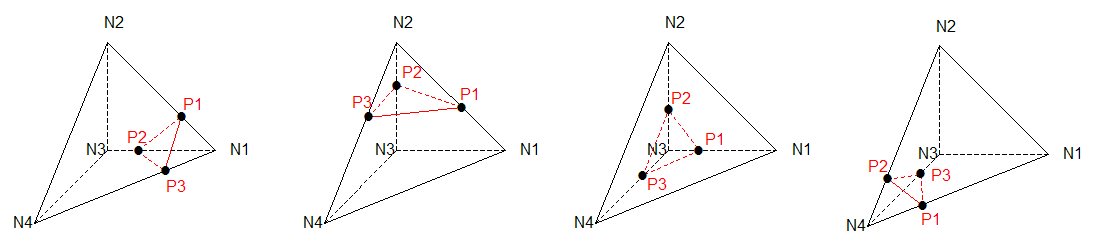

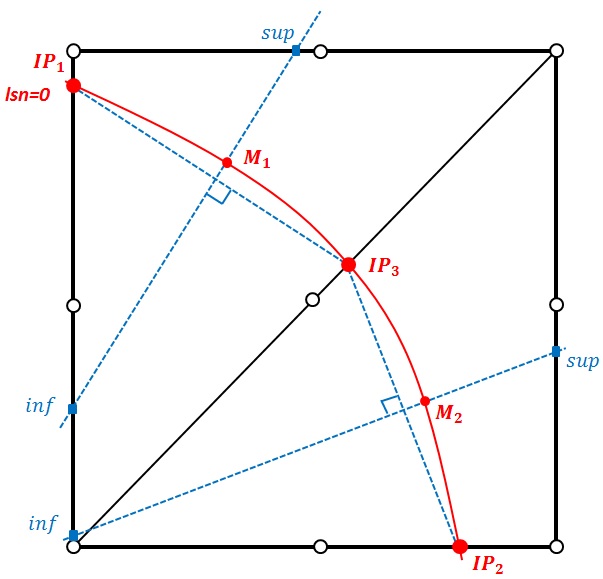

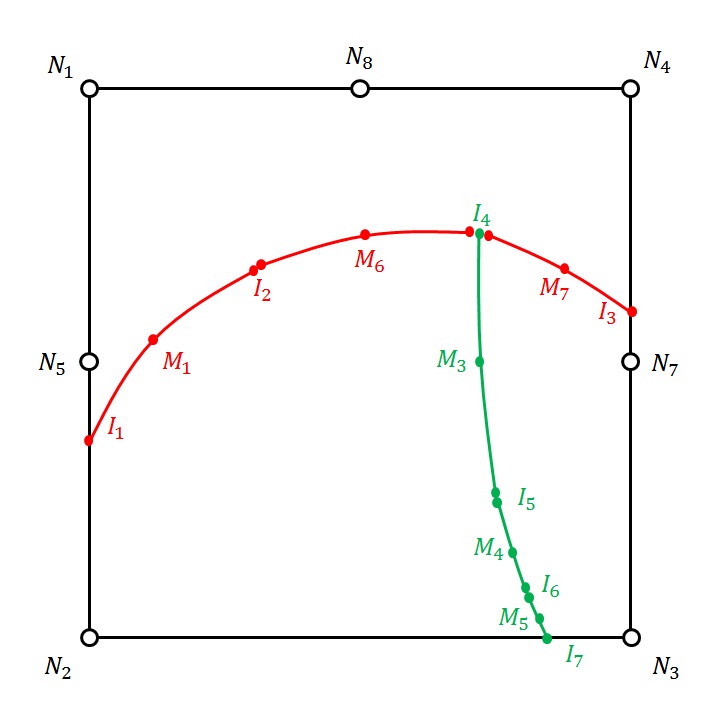

Figure 3.2.2-2: partitioning a multi-cracked element using the « vectorized » sign field. For each domain, the first scalar component of the sign vector corresponds to the characterization of the discontinuity of crack No. 1 and the second scalar component of the sign vector corresponds to the discontinuity of crack No. 2.

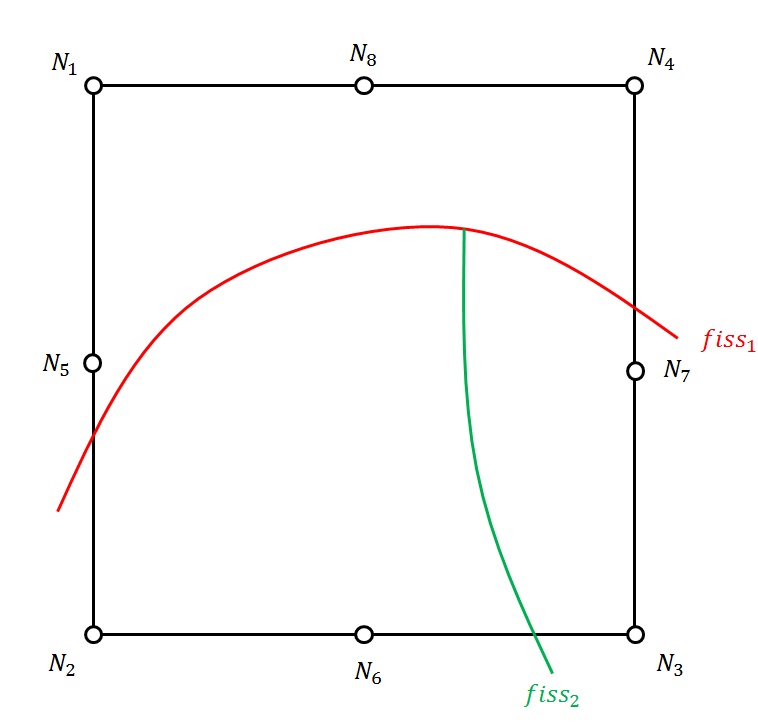

Construction of sign functions or junctions:

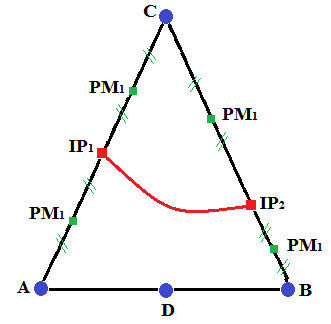

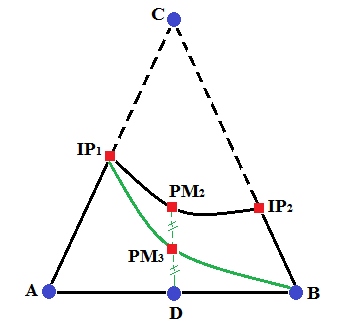

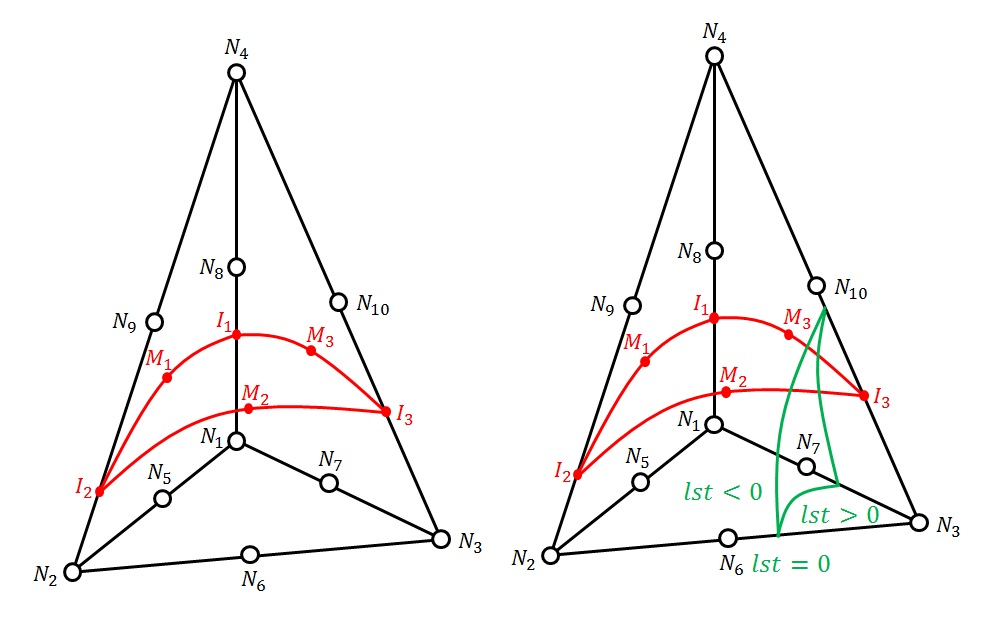

Recall the construction of Heaviside sign functions or junction-type functions [bib 70]. This is a Heaviside function that is « truncated » at the branch level. The enriched nodes are shown on the.

Figure 3.2.2-3: Enrichment for the second crack. Round nodes are enriched by the junction function, which is equal to +1, -1, 0.

The value of this function depends on the normal set levels of the 2 cracks. We consider the sign of the normal level set of crack 1, on the side where crack 2 is defined \(\mathrm{sign}({\mathrm{lsn}}_{1}({\mathrm{fiss}}_{2}))\) (in practice, we look at the sign of a point belonging to the domain of \({\mathrm{lsn}}_{1}\) in which crack 2 is located, cf. operand JONCTION from doc [U4.82.08]). We then have the junction enrichment function for crack 2 which is written as:

\({J}_{2}(x)=\{\begin{array}{}H({\mathrm{lsn}}_{2}(x))\text{si}\mathrm{sign}({\mathrm{lsn}}_{1}({\mathrm{fiss}}_{2}))H({\mathrm{lsn}}_{1}(x))\ge 0\\ 0\text{sinon}\end{array}\)

It is possible to connect a third crack to the first one (for example to model an intersection):

\({J}_{3}(x)=\{\begin{array}{}H({\mathrm{lsn}}_{3}(x))\text{si}\mathrm{sign}({\mathrm{lsn}}_{1}({\mathrm{fiss}}_{3}))H({\mathrm{lsn}}_{1}(x))\ge 0\\ 0\text{sinon}\end{array}\)

If we want to connect the third crack to the second, we must take into account the domain of definition of the first, we will therefore have:

\({J}_{3}(x)=\{\begin{array}{}H({\mathrm{lsn}}_{3}(x))\text{si}\forall i\in [\mathrm{1,2}],\mathrm{sign}({\mathrm{lsn}}_{i}({\mathrm{fiss}}_{3}))H({\mathrm{lsn}}_{i}(x))\ge 0\\ 0\text{sinon}\end{array}\)

If we generalize the approach to a crack \(N\) that connects to the cracks in a set \(K\), we then define the set of cracks \(P\), which contains both all the cracks in \(K\) and all the cracks into which the cracks in \(K\) possibly branch. The junction function is then written in general terms:

\({J}_{N}(x)=\{\begin{array}{}H({\mathrm{lsn}}_{N}(x))\text{si}\forall i\in P,\mathrm{sign}({\mathrm{lsn}}_{i}({\mathrm{fiss}}_{n}))H({\mathrm{lsn}}_{i}(x))\ge 0\\ 0\text{sinon}\end{array}\)

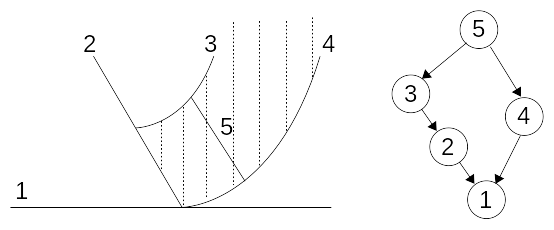

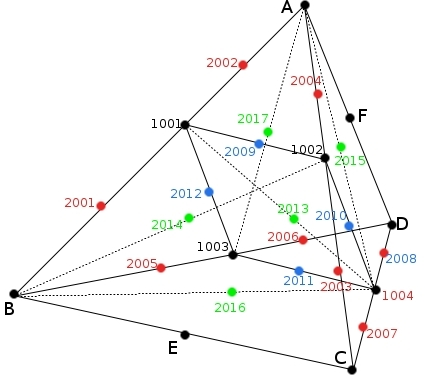

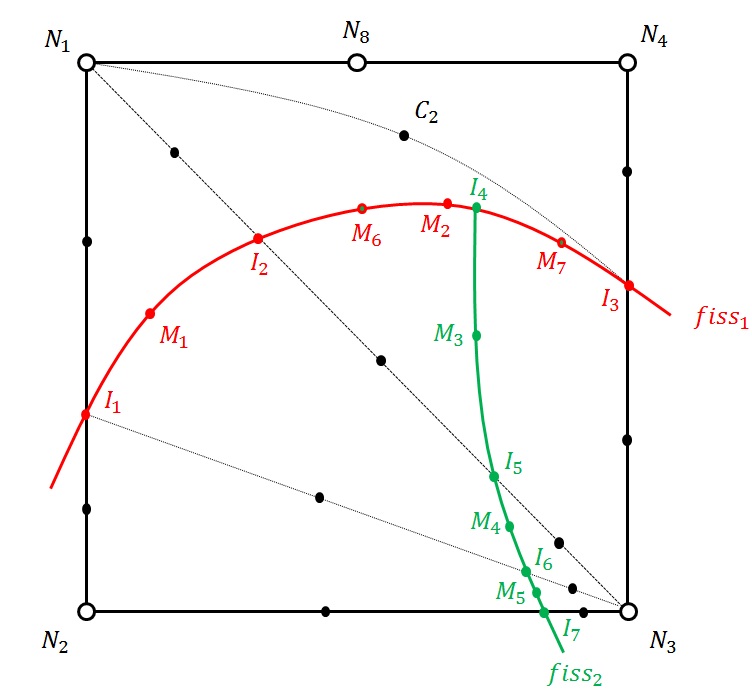

An example of configuration is shown. On this example a crack connectivity tree is constructed. We deduce from this tree that for \(N=3\), we have \(K=2\) and \(P=[\mathrm{1,2}]\). Similarly for \(N=5\), \(K=[\mathrm{3,4}]\) and \(P=[\mathrm{1,2}\mathrm{,3}\mathrm{,4}]\) are deduced. So we have \({J}_{5}(x)=H({\mathrm{lsn}}_{5}(x))\) on the shaded domain of the figure, and zero elsewhere.

Figure 3.2.2-4: Network of cracks on the left, hierarchy tree on the right, the shaded area corresponds to the domain where the enrichment function of crack 5 is not zero.

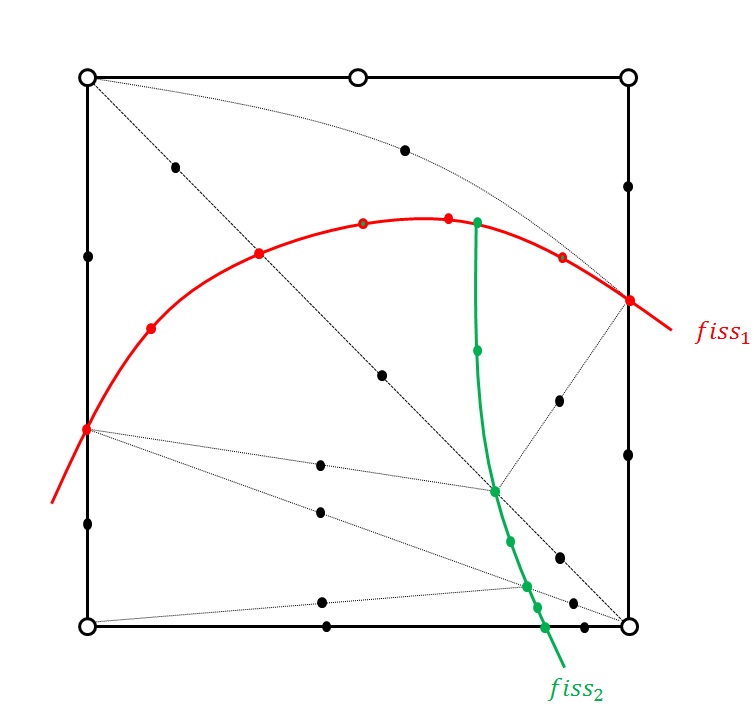

Exploitation of sign functions for the assembly of ddls domains:

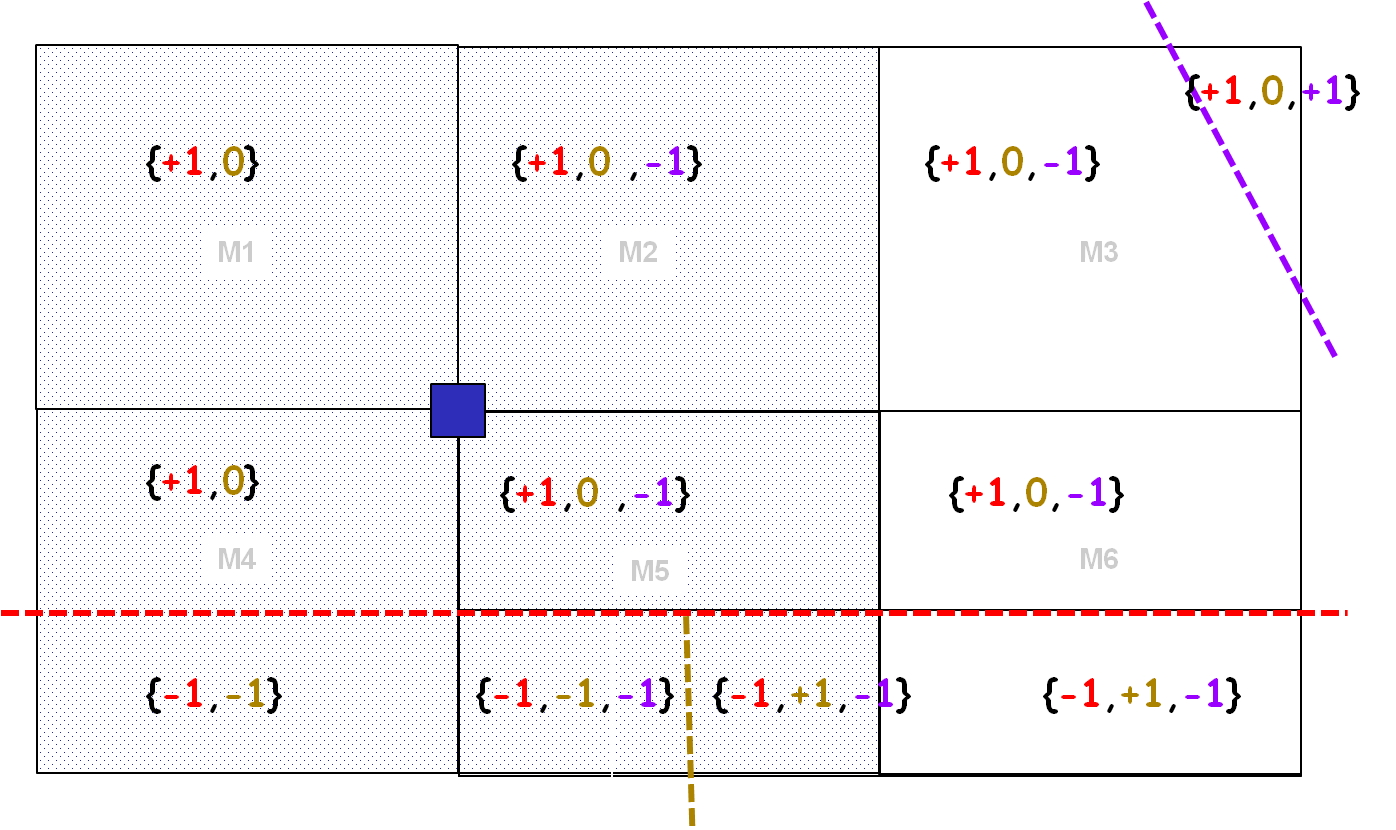

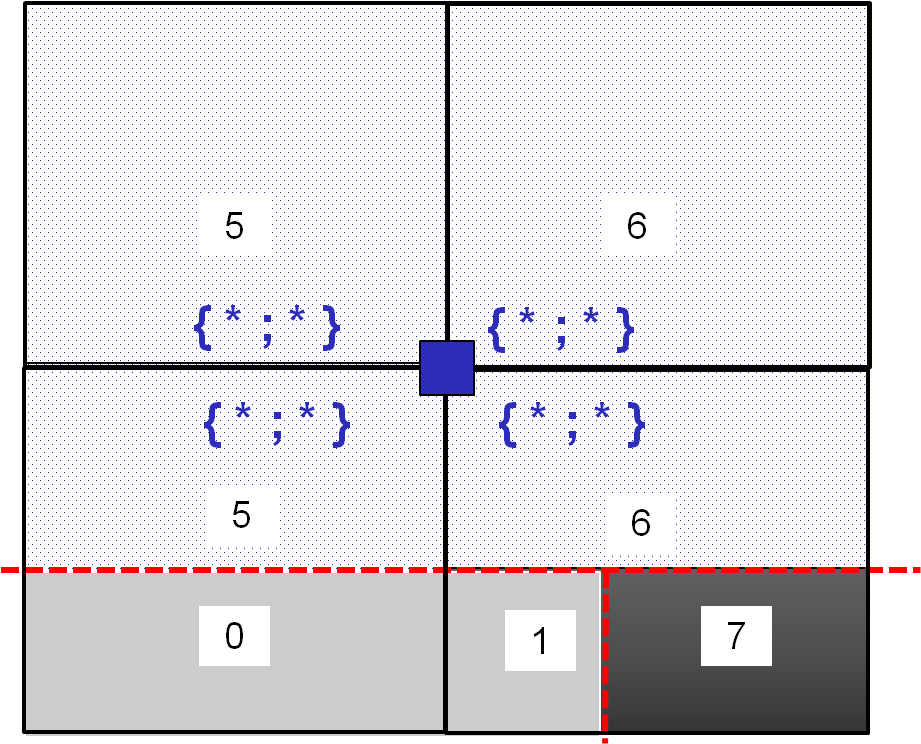

To build the ddls domains defined in the previous paragraph, we propose to reuse the information from the Heaviside sign field to define elementary partitioning. Since the algorithm is much too complex to explain in terms of data structures, we give a graphical translation of the algorithm. For example, here are the concatenated Heaviside sign fields that we want to use to build elementary partitioning:

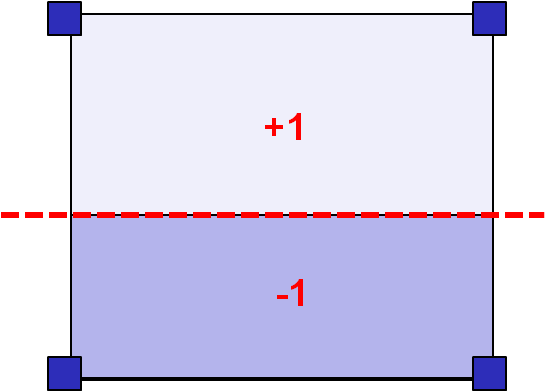

Simple crack |

Simple junction |

Multiple junction |

|

|

|

Since the case of a mono-crack is quite easy, we will focus in detail on the case of a simple junction. In the following graphical explanation, we propose to change the perspective from the basic description suggested above. The algorithm will be described from the point of view of node support, which is more suitable for describing nodal enrichment functions.

From the point of view of the node, the information on the sign fields is richer than the idyllic case described above. In fact, the node sees information on nearby cracks, that is to say, located in the first and second bands of elements adjacent to the support of the node. In the node support, there is therefore a crack status (cf. [D4.10.02]) to discriminate cracks intersecting the node support and nearby cracks that do not intersect the node support.

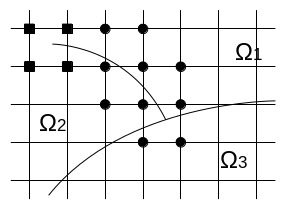

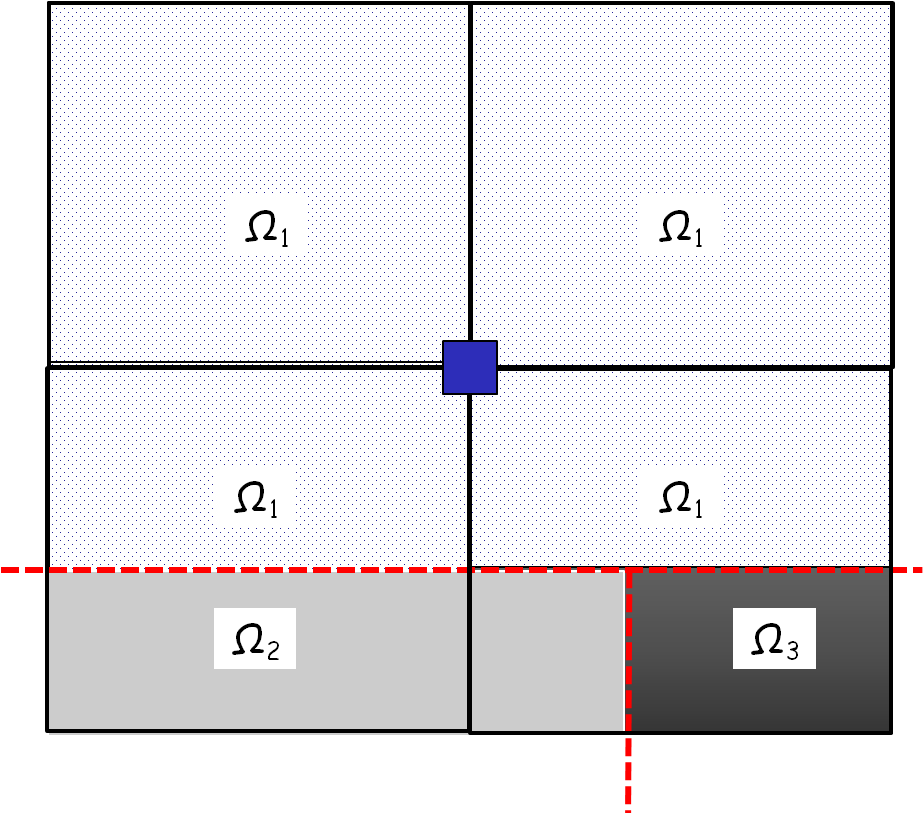

This difficulty makes the association between sign fields and domain partitions non-trivial. This association is necessarily local and must take into account the pollution of sign fields linked to the nearby bands mentioned above. On the example given in the table below, the domains must be associated with the sign field in the following way:

we associate the domain \({\mathrm{\Omega }}_{\text{1}}\) ↔ with the sign field \(\{\text{+1},\text{0},\text{*}\}\)

we associate the domain \({\mathrm{\Omega }}_{\text{2}}\) ↔ with the sign field \(\{\text{-1},\text{-1},\text{*}\}\)

we associate the domain \({\mathrm{\Omega }}_{\text{3}}\) ↔ with the sign field \(\{\text{-1},\text{+1},\text{*}\}\)

Domain partitioning |

Associated sign field |

|

|

To compress the information of the sign vectors, it is preferable to transform the sign vectors using the following reversible coding function:

\(\mathit{code}(\underline{P})=\sum _{\mathit{ifiss}=1}^{\mathit{nfiss}}{3}^{\mathit{nfiss}-\mathit{ifiss}}\left(\mathit{He}(\underline{P},\mathit{ifiss})+1\right)\)

where,

ifiss is the number in the vector sign field,

P designates the current point (a node or a gauss point),

nfiss is the length of the sign field.

Figure 3.2.2-5: Sign vectors have varying lengths, which leads to different identifiers for the same domain. These identifiers then constitute a second level of sub-partitioning.

Given the variability in the nfiss length of the sign vectors, a domain may have a code that is different from one element to another, as illustrated. For example, domain \({\mathrm{\Omega }}_{\text{1}}\) has two identifiers (5 and 6). These identifiers can be thought of as two*subpartitions* of domain \({\mathrm{\Omega }}_{\text{1}}\).

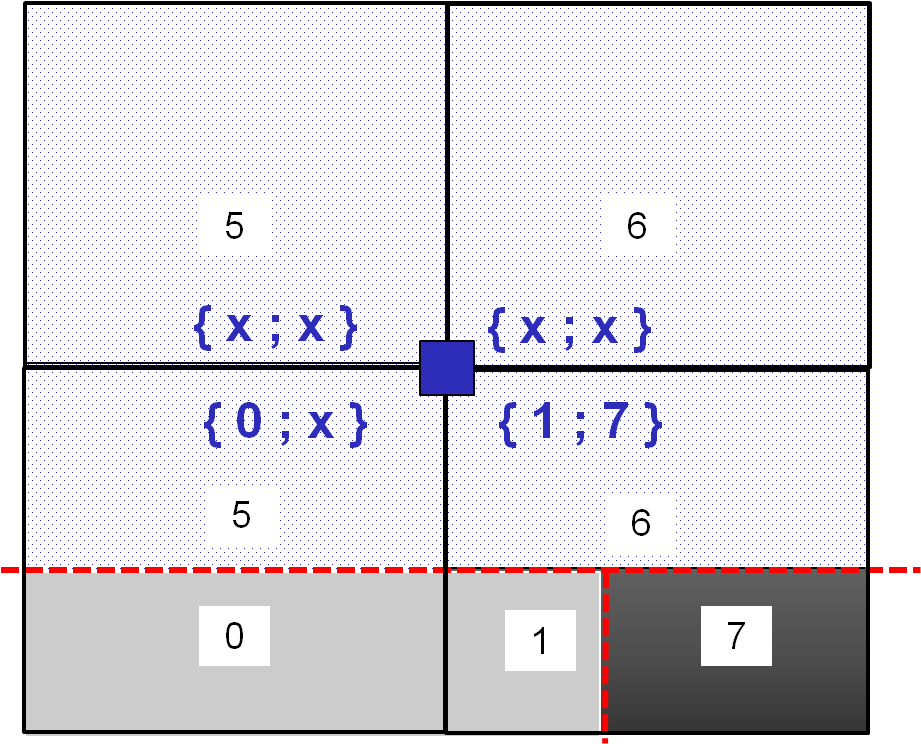

This sub-partitioning is not a problem as long as the information on this sub-partitioning is grouped at the node level, for the assembly of domain « ddls ». To identify these two ddls domains in each element, all you have to do is concatenate the information on the sub-partitions:

If a sub-partition of the complementary domain is found, the information is stored in the dedicated location (data structure by node and by element),

Otherwise, there is no sub-partition for this item.

The result of such an elementary loop is summarized in the table below. In each element we look for a sub-partition of each domain complementary to the blue node. If no sub-partition is found in the element, a cross” x “(or -1 for example) is marked in the dedicated location.

|

|

For the blue node belonging to domain \({\mathrm{\Omega }}_{\text{1}}\), the evaluation of the characteristic functions \({\chi }_{{\Omega }_{\text{2}}}\) or \({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{3}}}\) is obvious given the partitioning information in the table above. In each element, the first component (of the concatenated field) provides information on the identifier of the first ddl domain \({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{2}}}\), the second component (of the concatenated field) provides information on the identifier of the second ddl domain \({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{3}}}\):

If the identifier of the domain to which the Gauss point belongs corresponds to the \(k\in [\mathrm{1,2}]\) component stored at the node, then the characteristic function associated with the \(k\) component takes the value « +2 ». Let us specify again that the component \(k=1\) is associated with the ddl domain \({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{2}}}\) and the component \(k=2\) is associated with the ddl domain \({\mathrm{\chi }}_{{\mathrm{\Omega }}_{\text{3}}}\). It is important to note that this position does not change from one element to another, to ensure contiguity of the partitioning. For example, let’s say the collection of identifiers {0.1} corresponding to domain \({\mathrm{\Omega }}_{\text{2}}\). This is exactly the information that is stored in the first position in the data structure at the « blue » node, in the elements intersecting domain \({\mathrm{\Omega }}_{\text{2}}\).

Otherwise, the characteristic function takes the value zero if the identifier at the Gauss point does not correspond to the component at the node in the element in question.

Notes:

Note that the ddl domain \({\chi }_{{\Omega }_{\text{1}}}\) is not treated in the example above. In fact, to represent the two discontinuities introduced by the connection of two cracks, it is necessary to enrich the nodes whose support is intersected by the double discontinuity with two discontinuous functions. We then choose to enrich with the ddls associated with the domains complementary to the domain to which the node*belongs. This choice is well suited, taking into account the packaging problems detailed in §* 3.2.6 .

In Code_Aster, we prefer to store Heaviside sign information and domain identifier information at the Gauss point in integration sub-elements.

3.2.3. Enrichment with singular functions (3rd term)#

In order to represent the singularity at the bottom of a crack, we enrich the approximation with functions based on the asymptotic developments of the displacement field in linear elastic fracture mechanics [bib 25]. These expressions have been determined for a plane crack in an infinite medium.

\(\begin{array}{}{u}_{1}=\frac{1}{2\mu }\sqrt{\frac{r}{2\pi }}({K}_{1}\text{cos}\frac{\theta }{2}(\kappa -\text{cos}\theta )+{K}_{2}\text{sin}\frac{\theta }{2}(\kappa +2+\text{cos}\theta ))\\ {u}_{2}=\frac{1}{2\mu }\sqrt{\frac{r}{2\pi }}({K}_{1}\text{sin}\frac{\theta }{2}(\kappa -\text{cos}\theta )+{K}_{2}\text{cos}\frac{\theta }{2}(\kappa -2+\text{cos}\theta ))\\ {u}_{3}=\frac{1}{2\mu }\sqrt{\frac{r}{2\pi }}{K}_{3}\text{sin}\frac{\theta }{2}\\ \mu =\frac{E}{2(1+\nu )}\text{et}\kappa =3-4\nu \text{en}\text{déformations}\text{planes}\text{.}\end{array}\) eq. 3.2.3-1

The hypothesis of plane stresses cannot be retained because no cracked plate is in a situation of plane stresses in the vicinity of the singularity, when one places oneself at a finite distance from the skin of the shell.

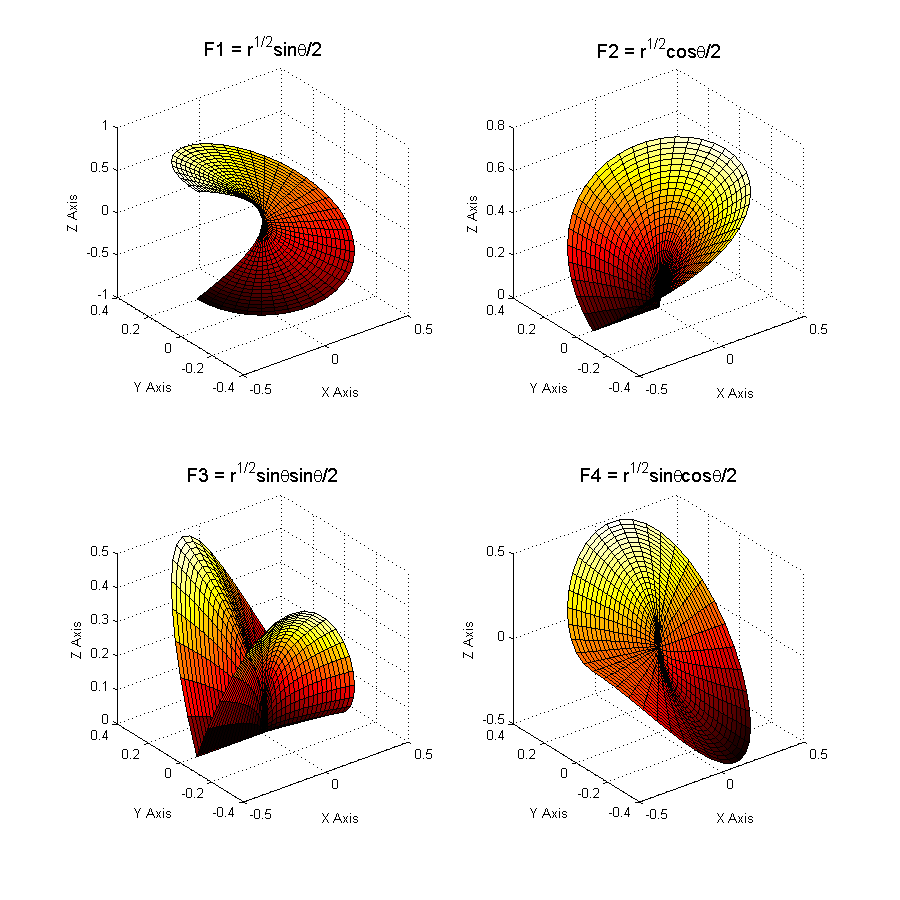

The basis for describing these fields includes 4 functions:

\(\left\{\sqrt{r}\text{cos}\frac{\theta }{2},\sqrt{r}\text{cos}\frac{\theta }{2}\text{cos}\theta ,\sqrt{r}\text{sin}\frac{\theta }{2},\sqrt{r}\text{sin}\frac{\theta }{2}\text{cos}\theta \right\}\).

Like:

\(\{\begin{array}{c}\text{cos}\frac{\theta }{2}\text{cos}\theta =-\text{sin}\theta \text{sin}\frac{\theta }{2}+\text{cos}\frac{\theta }{2}\\ \text{sin}\frac{\theta }{2}\text{cos}\theta =\text{sin}\theta \text{cos}\frac{\theta }{2}-\text{sin}\frac{\theta }{2}\end{array}\)

3.2.3.1. we then choose the following base [2]#

\(F=\left\{\sqrt{r}\text{sin}\frac{\theta }{2},\sqrt{r}\text{cos}\frac{\theta }{2},\sqrt{r}\text{sin}\frac{\theta }{2}\text{sin}\theta ,\sqrt{r}\text{cos}\frac{\theta }{2}\text{sin}\theta \right\}\).

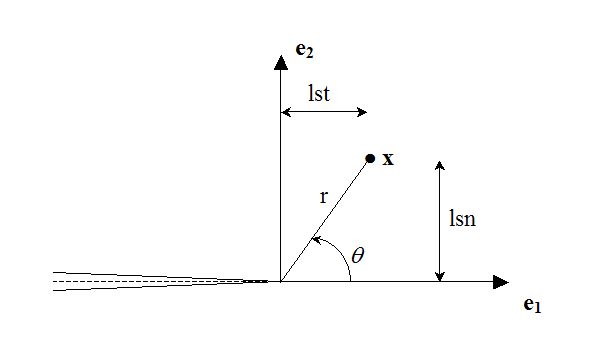

where \((r,\theta )\) are the polar coordinates in the local base at the bottom of the crack (see Figure 2.3-1 and Figure 3.2.3-1).

These coordinates can be easily expressed using level sets, since:

\(r=\sqrt{\text{lsn}\mathrm{²}+\text{lst}\mathrm{²}},\theta =\text{arctan}(\frac{\text{lsn}}{\text{lst}}),\theta \in \left[-\frac{\pi }{2},\frac{\pi }{2}\right]\)

In practice, we use the computer function \(\text{atan2}(\text{lsn},\text{lst})\) instead, which returns the main value of the argument of the complex number \((\text{lst},\text{lsn})\) expressed in radians in the interval \(\left[-\pi ,\pi \right]\).

For points located exactly on the lower lip \((\text{lsn}=0)\), the \(\text{atan2}(\mathrm{0,}\text{lst})\) function gives an angle equal to \(\pi\). In theory, \(\text{atan2}(-\mathrm{0,}\text{lst})\) makes it possible to get \(-\pi\) as expected, but numerically this is not always the case. To overcome this drawback, the following expression is instead used for angle \(\theta\):

\(\theta =H(\text{lsn})\mid \text{atan}2(\text{lsn},\text{lst})\mid ,\theta \in \left[-\pi ,\pi \right]\)

where \(H(\text{lsn})\) is the Heaviside function value. Thus, when one is on the lower lip, the value \(-\pi\) is reached.

Figure 3.2.3-1 : Polar coordinates in the local base

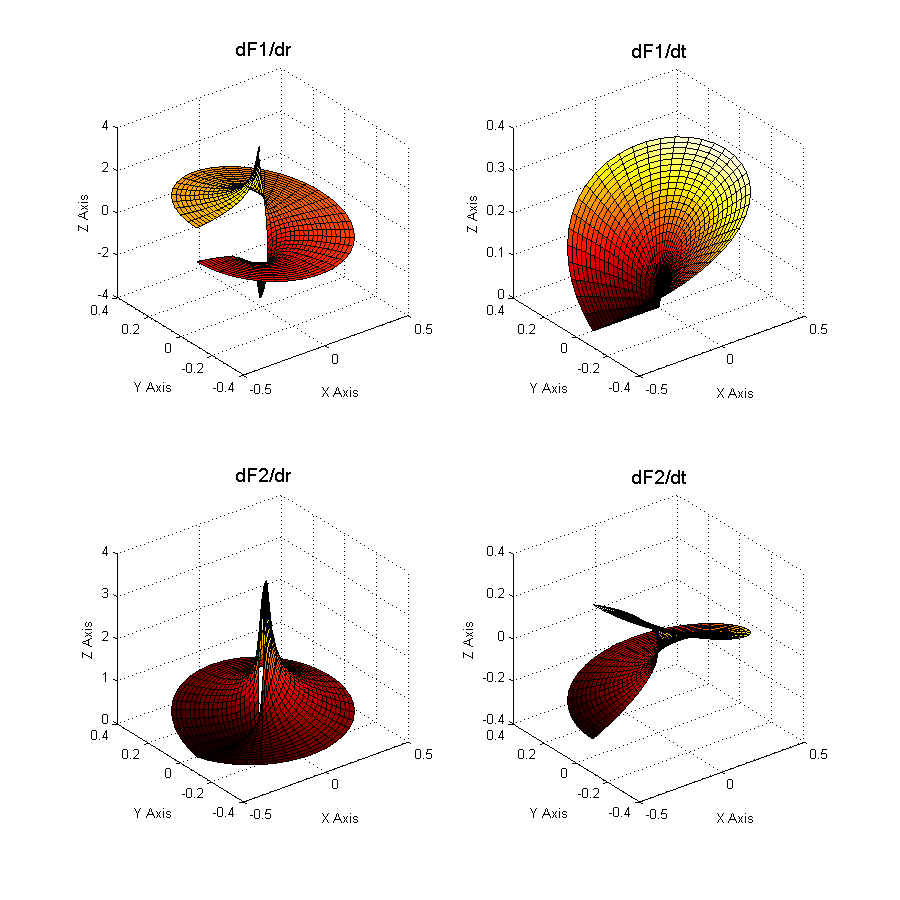

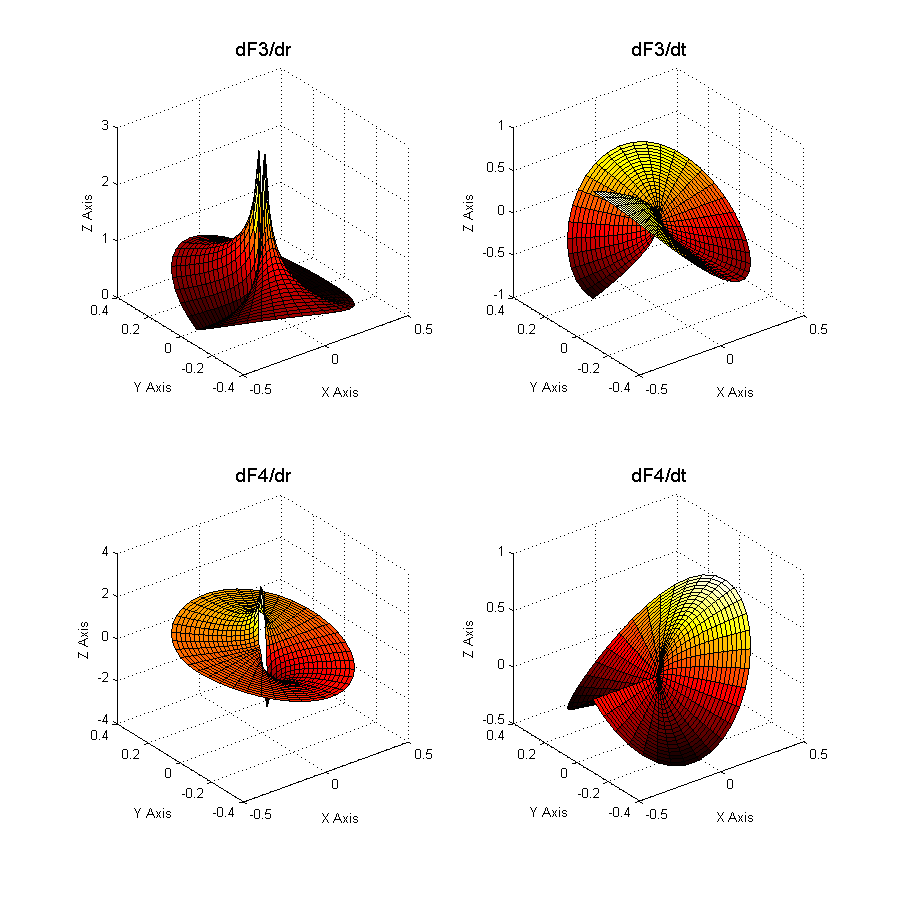

It should be noted that only the first function of the base is discontinuous across the crack. The other features are only added to improve accuracy. These functions are Westergaard solutions, asymptotic analytical solutions to a 2D elastic fracture problem. This base is well suited to 3D cases [bib 16] [bib 18], at least for cracks whose background is fairly regular. These functions are called « singular » because their derivatives are singular in \(r=0\).

Figure 3.2.3-2 : Enrichment functions at the bottom of the crack

Figure 3.2.3-3 : Derivatives of enrichment functions

The \({c}_{k}^{\alpha }\) are the enriched degrees of freedom. \(L\) is the set of nodes whose support is partially cut by the crack bottom (knots represented by a square on Figure 3.2.1-1). This means that a single layer of elements is enriched around the crack bottom. This enrichment is called « topological ».

3.2.4. Geometric enrichment#

From the first papers on X- FEM [bib 24], it was notified that a geometric criterion \({r}_{\text{max}}\) can be defined to determine the nodes enriched by singular functions (see Figure 3.2.1-1):

\(L\mathrm{=}\left\{\text{noeuds}\text{tels}\text{que}r<{r}_{\text{max}}\right\}\)

The first convergence studies were carried out in 2000 as part of GFEM [bib 26], taking into account several layers of elements enriched at the bottom of the crack.

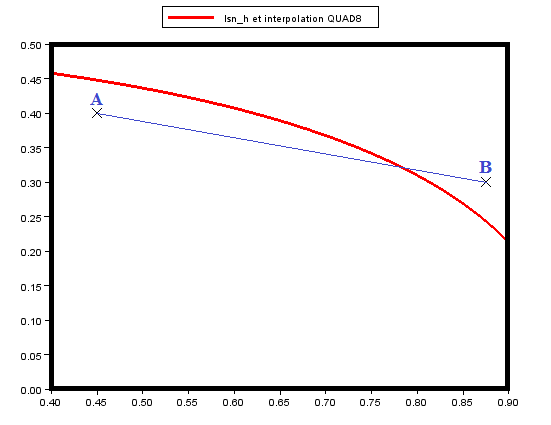

When studying convergence, we are interested in the evolution of the error in relation to the degree of refinement of the mesh. Generally, \(h\) designates a characteristic length of the elements of the mesh, and the aim is to determine the parameter \(\alpha\) called convergence rate (or speed or order), such that the relative error \({e}_{\text{rel}}\) is written in the form:

\({e}_{\mathrm{rel}}={\parallel u-{u}_{h}\parallel }_{{H}^{1}}\underset{h\to 0}{\approx }{\mathrm{Ch}}^{\alpha }\)

where \(C\) is a constant that is independent of \(h\).

Since

\(\text{log}{e}_{\text{rel}}\mathrm{\approx }\text{log}C+\alpha \text{log}h\)

the parameter \(\alpha\) appears as the slope of the line \(\text{log}{e}_{\text{rel}}\) as a function of \(\text{log}h\) when \(h\) tends to 0.

Stazi et al. [bib 27] studies the convergence of the error in energy for an infinite plate with a right crack, in mode I, for linear and quadratic formulations. He notes that the quadratic improves the error, but not the convergence rate. Béchet et al. [bib 28] confirms this observation and shows that a fixed enrichment zone makes it possible to find an almost optimal convergence rate.

At the same time, Laborde*et al.* [bib 29] deepens the question, and tests convergence rates for higher-order polynomial formulations. In addition, it brings improvements in order to regain an optimal rate, or even a superconvergence. The Tableau 3.2.4-1 brings together the results obtained by Laborde for different variants of X- FEM, and this for polynomial approximations of degree \(k=\mathrm{1,2}\mathrm{,3}\).

FEM |

X- FEM |

X- |

X- FEM (f. a. ) |

X- FEM (d.g. ) |

X- FEM (p.m.) |

|

P1 |

0.5 |

0.5 |

0.5 |

0.5 |

0.5 |

1.1 |

P2 |

0.5 |

0.5 |

0.5 |

1.8 |

1.5 |

2.2 |

P3 |

0.5 |

0.5 |

0.5 |

2.6 |

3.3 |

Table 3.2.4-1 : Order of convergence of the different variants of X- FEM

The first column corresponds to the orders of convergence of the classical finite element method for a cracking problem. Given the singularity, the convergence speed is in \(\sqrt{h}\) regardless of the \(k\) degree. The 2D simulations carried out on a test problem: rectilinear crack on a square in opening mode I show that X- FEM does not improve the convergence rate. This can be explained by the fact that topological enrichment only concerns a single layer of elements at the bottom of the crack. The area of influence of this enrichment is therefore strongly linked to \(h\). Thus, when \(h\) tends to 0, the size of the enrichment’s area of influence also tends to 0. The idea that seems natural is then to no longer limit the enrichment zone to a single layer of elements, but to expand it to an area of fixed size, independent of the refinement of the mesh. The 3rd column of Tableau 3.2.4-1 shows the results obtained with this method called X- FEM f. a. (for Fixed Enrichment Area). We are almost back to the expected convergence rates (\(\alpha =k\) for an approximation \(\mathit{Pk}\)). However, the packaging is degraded compared to the usual X- FEM method. In order to regain acceptable conditioning, Laborde proposes to bring together the degrees of freedom enriched by the singular functions. Clearly, instead of having different rich ddls for each enriched node, they are globalized in order to have only one for each singular function and per crack (but in 3D, you can’t see how it works in 3D). With this arrangement, conditioning is greatly improved, but convergence rates are lower (X- FEM d. g.). The problem comes from the transition elements between the enriched zone and the non-enriched zone. According to Laborde, the phenomenon is explained by the effect of the partition of the unit that cannot be used on these partially enriched elements. To overcome this defect, an ultimate version is proposed: X- FEM p.m. (for Pointwise Matching). The movements of the nodes on the border between the enriched zone and the non-enriched zone are imposed equal. Thanks to this integration, we obtain expected convergence rates (or even a slight super-convergence).

Note:

For polynomial approximations of degree \(k=\mathrm{2,3}\), Laborde et al. [bib 29 ] like many others use degree form functions \(k\) for classical terms and enriched by discontinuity functions (2nd term), so that the jump is of degree \(k\) . On the other hand, for the terms enriched by the singular functions (3rd term), it is sufficient to use the linear form functions to capture the singularity at the bottom of the crack and not to excessively deteriorate the conditioning of the matrices.

Currently, in Code_Aster, only a degree 1 approximation is possible, and the fact of entering or not entering a radius value for the enrichment zone (keyword RAYON_ENRI) makes it possible to place ourselves respectively in the X- FEM (f. a.) or X- FEM classical frame.

3.2.5. Enrichment in Code_Aster#

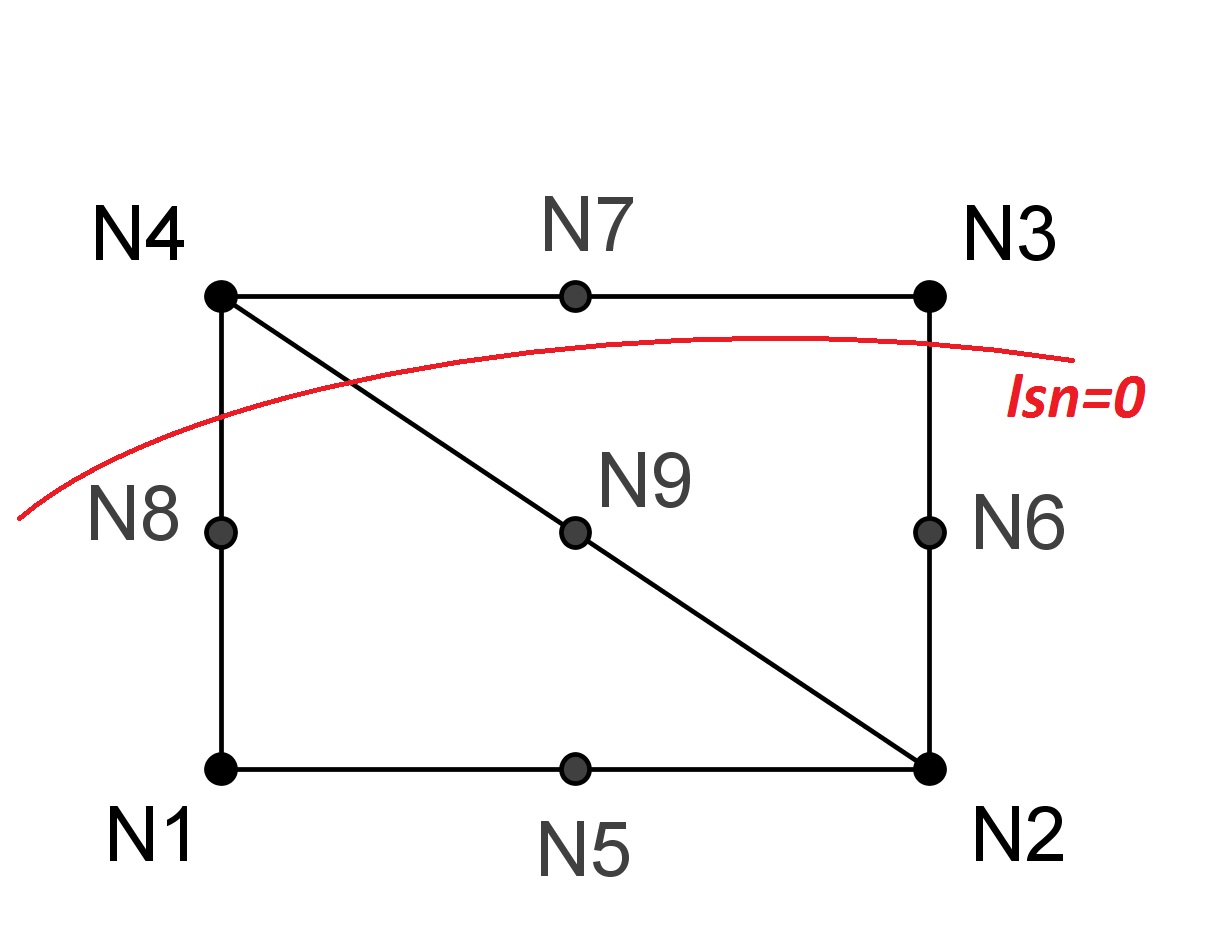

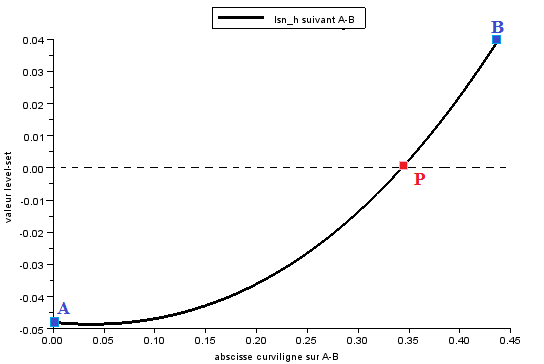

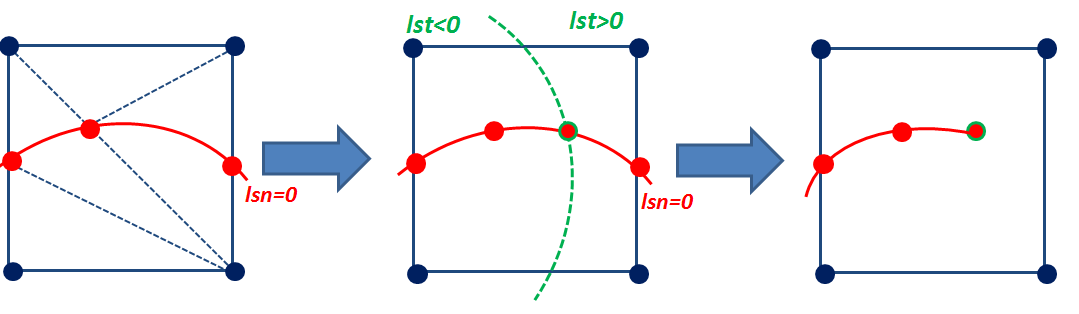

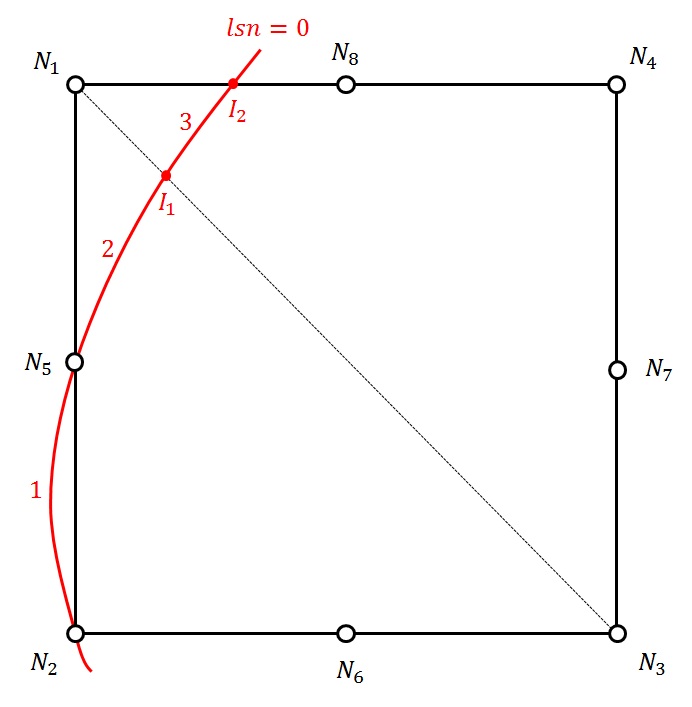

To find out if a node is enriched by the Heaviside function (« Heaviside » node) or by the singular functions (« crack-tip » node), we calculate the min and the max of the normal level set on all the nodes belonging to the node’s support (the concept of « support » is defined in paragraph [§ 3.2]), and we calculate the min and max of the tangent level set on all the points belonging to the node’s support (the concept of « support » is defined in paragraph [§]), and we calculate the min and max of the level set tangent on all the points belonging to the node’s support (the concept of « support » is defined in paragraph [§]), and we calculate the min and max of the tangent level set on all the points belonging to the node’s support to the support of the node in question where the normal level set is cancelled.

\(\begin{array}{c}j\in K\iff \left(\underset{x\in {N}_{n}(j)}{\text{min}}(\text{lsn}(x))\underset{x\in {N}_{n}(j)}{\text{max}}(\text{lsn}(x))<0\right)\text{et}\left(\underset{x\in {N}_{n}(j)\cap \text{lsn}(x)=0}{\text{max}}(\text{lst}(x))<0\right)\\ k\in L\iff \left(\underset{x\in {N}_{n}(j)}{\text{min}}(\text{lsn}(x))\underset{x\in {N}_{n}(j)}{\text{max}}(\text{lsn}(x))\le 0\right)\text{et}\left(\underset{x\in {N}_{n}(j)\cap \text{lsn}(x)=0}{\text{min}}(\text{lst}(x))\underset{x\in {N}_{n}(j)\cap \text{lsn}(x)=0}{\text{max}}(\text{lst}(x))\le 0\right)\end{array}\)

Similar ideas appear in [bib 17], but it seems that some scenarios were not taken into account. These expressions are the culmination of the first efforts [bib 30] which aimed to determine the types of enrichment using level sets only. The reader will find the corresponding explanatory figures there.

In the case of geometric enrichment at the bottom of a crack, the criterion for selecting nodes is as follows:

\(k\in L\iff \sqrt{\text{lsn}(x)\mathrm{²}+\text{lst}(x)\mathrm{²}}\le {r}_{\text{max}}\)

Regarding the choice of the value of the enrichment radius, nothing is clearly indicated in the literature. However, it seems that a radius equal to between \(1\mathrm{/}5\) and \(1\mathrm{/}10\) of the length of the crack is a relevant choice.

Recent studies have shown that since geometric enrichment greatly degrades the conditioning of the stiffness matrix, it was necessary to limit it in a restricted area around the bottom of the crack, while waiting for treatment to improve the conditioning. An alternative is proposed, which is halfway between topological and geometric enrichment: an enrichment on \(n\) layers [bib 31]. In this case, we calculate a \({r}_{\mathit{max}}\) (unique for each crack) based on the user data for the number of layers, then we apply the previous formula.

Algorithm for choosing node enrichment:

Let \(\mathit{MAFIS}\) be the set of stitches on which the normal level set is cancelled

loop on all \(P\) nodes of the mesh

initialization of the max and min of the level sets

Let \(A\) and \(B\) be the two ends of the segment

If \(\text{lsn}\left(A\right)=0\) then

Update \(\text{maxlst}\) and \(\text{minlst}\) with \(\text{lst}(A)\) if necessary

End yes

If \(\text{lsn}(B)=0\) then

Update \(\text{maxlst}\) and \(\text{minlst}\) with \(\text{lst}(B)\) if necessary

End yes

If \(\text{lsn}(A)\text{lsn}(B)<0\) then

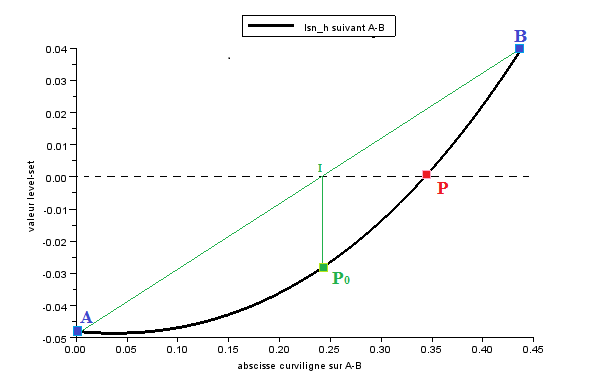

in the case of linear interpolation

\(C=A-\frac{\text{lsn}(A)}{\text{lsn}(B)-\text{lsn}(A)}\left(B-A\right)\) eq. 3.2.5.1-1

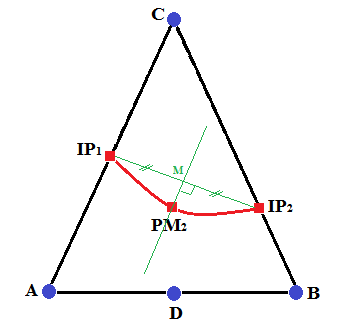

in the case of quadratic interpolation

The coordinate in reference space \(\xi\) of the intersection point \(C\) with the curve \(\text{lsn}=0\) on the edge is determined. Its \(\mathit{lst}\) is then given by:

\(\mathit{lst}(C)=\frac{\mathit{lst}(A)\ast \xi \ast (\xi -1)}{2}+\frac{\mathit{lst}(B)\ast (\xi +1)\ast \xi }{2}-\mathit{lst}(M)\ast (\xi -1)\ast (\xi +1)\)

eq. 3.2.5.1-2

Update \(\text{maxlst}\) and \(\text{minlst}\) with \(\text{lst}(C)\) if necessary.

End yes

Update \(\text{maxlsn}\) and \(\text{minlsn}\) if necessary

If \((\text{minlsn}\text{.}\text{maxlsn}<0)\) and \((\text{maxlst}\mathrm{\le }0)\) then \(P\in K\)

topological enrichment cases

If \((\text{minlsn}\text{.}\text{maxlsn}\mathrm{\le }0)\) and \((\text{minlst}\text{.}\text{maxlst}\mathrm{\le }0)\) then \(P\in L\)

case of geometric enrichment:

If \(\sqrt{\text{lsn}{(P)}^{2}+\text{lst}{(P)}^{2}}\mathrm{\le }{r}_{\text{max}}\) then \(P\mathrm{\in }L\)

end loop

To obtain the equation [éq. 3.2.5.1‑1], as well as the value of the level set tangent to the point \(C\), we first determine the curvilinear abscissa \(s\) such that

\(C=A+s(B-A)\)

thanks to the fact that the normal level set is cancelled in \(C\), i.e.

\(\text{lsn}(C)\mathrm{=}\text{lsn}(A)+s(\text{lsn}(B)\mathrm{-}\text{lsn}(A))\mathrm{=}0\)

We deduce the expression for point \(C\) as well as the value of the tangent level set in \(C\):

\(\begin{array}{c}\text{lst}\left(C\right)=\text{lst}\left(A\right)+s\left(\text{lst}\left(B\right)-\text{lst}\left(A\right)\right)\\ =\text{lst}\left(A\right)-\frac{\text{lsn}\left(A\right)}{\text{lsn}\left(B\right)-\text{lsn}\left(A\right)}\left(\text{lst}\left(B\right)-\text{lst}\left(A\right)\right)\end{array}\)

To obtain, in the quadratic case, the equation [éq. 3.2.5.1‑2], as well as the value of the level set tangent to the point \(C\), the coordinate in the reference space \(\xi\) of the point \(C\) is determined beforehand

\(\mathit{lsn}\) is interpolated quadratically along the edge. It is therefore a question of solving a polynomial of the second degree:

\(0=\frac{\mathit{lsn}(A)\ast \xi \ast (\xi -1)}{2}+\frac{\mathit{lsn}(B)\ast (\xi +1)\ast \xi }{2}-\mathit{lsn}(M)\ast (\xi -1)\ast (\xi +1)\)

Equation \(\mathit{lsn}=0\) has a unique solution on the edge because \(\text{lsn}(A)\text{lsn}(B)<0\)

Once the coordinate \(\xi\) of the point \(C\) is obtained, we interpolate the \(\mathit{lst}\) of the points \(A\), \(B\) and \(M\) to obtain the \(\mathit{lst}\) of the point \(C\):

\(\mathit{lst}(C)=\frac{\mathit{lst}(A)\ast \xi \ast (\xi -1)}{2}+\frac{\mathit{lst}(B)\ast (\xi +1)\ast \xi }{2}-\mathit{lst}(M)\ast (\xi -1)\ast (\xi +1)\)

Note:

The same node can belong to sets \(K\) and \(L\) .

In Code_Aster, it is necessary to define specific types of finite elements, and in order not to multiply the number of possibilities, the choice was made to define 3 types of finite elements X- FEM: the « Heaviside » elements, the « crack-tip » elements and the mixed « Heaviside and crack-tip » elements.

If the mesh has at least one « Heaviside » type node, then it is a « Heaviside » mesh.

If the mesh has at least one « crack-tip » type node, then it is a « crack-tip » mesh.

If the mesh has at least one « Heaviside » node and at least one « crack-tip » node, or if the mesh contains at least one « Heaviside and crack-tip » node, then it is a « Heaviside and crack-tip » mesh.

Note \(\mathit{GRMAEN1}\) the « Heaviside » mesh, \(\mathit{GRMAEN2}\) the « crack-tip » mesh, and the « Heaviside » mesh and the « crack-tip » mesh. \(\mathit{GRMAEN3}\)

Let’s say mesh \(i\), and \(\text{Ni}\) the set of knots \(j\) of stitch \(i\).

\(\begin{array}{c}\text{si}\left(\exists j\in \text{Ni},\text{tel}\text{que}j\in K\right)\text{alors}i\in \text{GRMAEN}1\\ \text{si}\left(\exists j\in \text{Ni},\text{tel}\text{que}j\in L\right)\text{alors}i\in \text{GRMAEN}2\\ \text{si}\left(\exists (j,k)\in {\text{Ni}}^{2},\text{tels}\text{que}j\in K\text{et}k\in L\right)\text{ou}\left(\exists j\in \text{Ni},\text{tel}\text{que}j\in K\cap L\right)\text{alors}i\in \text{GRMAEN}3\end{array}\)

We note that all the nodes of an element will be affected by the same characteristics and the same enrichment, but this is not necessarily what is wanted. It is therefore necessary to cancel out the degrees of freedom enriched « in excess ».

As we saw in the previous paragraph, a « Heaviside » mesh can only include one « Heaviside » type node, for example, the other nodes of the mesh being conventional nodes that do not require any enrichment. However, these nodes will be affected by the degrees of freedom of the « Heaviside » mesh, and therefore by enriched degrees of freedom. Therefore, it is necessary to cancel these degrees of freedom that were wrongly enriched. Cancellation actually makes it possible to move continuously from an enriched zone to a non-enriched zone and allows two types of elements to coexist (in the sense that they share a common border), one enriched, the other not enriched. The unenriched variable is the same on the common border and the degrees of freedom corresponding to the enrichment are set to zero for the element that is enriched on this same border (but not elsewhere in this same element). This way of doing things avoids having to solve the question of « blending elements », which can be treated in [bib 73].

Several cases occur:

Heaviside degrees of freedom to be cancelled at the classic knots of a Heaviside or mixed mesh

degrees of freedom crack-tip to be cancelled at the classic knots of a crack-tip or mixed mesh

Heaviside degrees of freedom to cancel at the crack-tip nodes of a mixed mesh

crack-tip degrees of freedom to be cancelled at Heaviside nodes in a mixed mesh.

The technique for cancelling these degrees of freedom is explained in detail in [R5.03.54, §4.4].

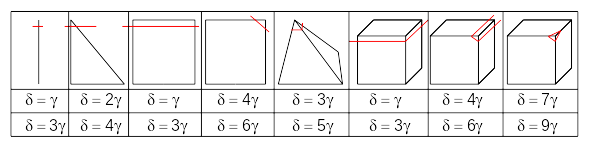

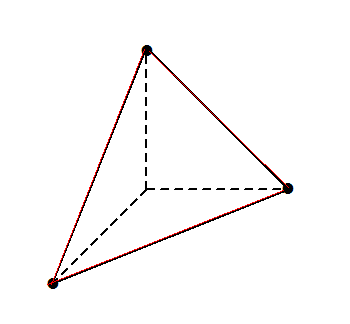

3.2.6. Enrichment packaging#

Conditioning, noted \({10}^{\delta }\), corresponds to the ratio between the largest and the smallest eigenvalue of a system to be inverted. For a double-precision calculation with a numerical error in \({10}^{\mathrm{-}15}\), the relative error obtained on the calculation is of the order of \({10}^{\mathrm{-}15+\delta }\). We must therefore verify condition \(\delta <9\) to guarantee numerical precision in the order of \({10}^{\mathrm{-}6}\).

Geometric enrichment greatly degrades the conditioning of the stiffness matrix [bib 29], [bib 28]. Béchet et al. [bib 28] propose a technique for orthogonalizing degrees of freedom when calculating elementary stiffness matrices in order to improve the conditioning of the assembled matrix. Laborde et al. [bib 29] explain that the poor conditioning is due to the fact that the chosen enrichment base does not form a free family locally. They therefore propose to put a single degree of freedom for these functions over the entire enrichment zone and to connect the movements to the limit between enriched and non-enriched zones in order to find optimal convergence rates. Moreover, the conditioning problem is such that with quadratic elements it becomes impossible to obtain results, without implementing one of the techniques [bib 29], [bib 28]. Indeed, for these elements, the poor conditioning is due not only to the singular part of the enrichment, but also to Heaviside enrichment, when the crack passes very close to a node. The evolution of the number of packages as the interface approaches the nodes of the mesh is shown in the figure, for linear and quadratic elements respectively. The values in this figure are very approximate. On the one hand, we do not take into account the elements of the neighborhood. On the other hand, these values are obtained in a rough manner. In the case of the cube cut in a corner for example (on the right in the figure), we consider the stiffness of the small cube on the corner of the side  \({10}^{\mathrm{-}\gamma }\) instead of the small tetrahedron at the corner. Finally, the conditioning of a global problem is generally greater than the local conditioning and increases when the mesh is refined. By taking into account all these considerations, in practice, packages of an order of 2 to 4 times greater than those in the figure are obtained.

Figure 3.2.6.1-1: the distance to the nearest vertex node normalized by the length of the side equal to \({10}^{\mathrm{-}\gamma }\) we show the dependence of \(\delta\) conditioning number, on the parameter \(\gamma\) for the linear elements in the upper part and quadratic in the lower part.

The technique of readjusting the level set mentioned in paragraph [§ 2.2.4] makes it possible to prevent this poor conditioning. Noting \({10}^{-\gamma }\) the distance from the intersection point of the level set to the nearest vertex node normalized by the length of the side, the adjustment to 1% of the edge length made in paragraph [§ 2.2.4] corresponds to \(\gamma \mathrm{=}2\). This readjustment must act quickly enough so that the conditioning is not too damaged, but not for values of \(\gamma\) that are too low so as not to disturb the system by unrealistically displacing the cracking surface. For quadratic hexahedral elements, if \({10}^{\mathrm{-}15+9\gamma +2}\) must be of the order of \({10}^{\mathrm{-}5}\), we obtain \(9\gamma +2\mathrm{=}10\), which is a readjustment to 13% of the edge length. It is therefore not possible in this case to reasonably activate the readjustment of the level sets at the top, so that the conditioning is not damaged.

Under these conditions, a criterion is set up to detect whether a Heaviside degree of freedom is a problem. If the criterion is met, the degree of freedom is eliminated by zeroing out, as in paragraph [§ 3.2.5.3].

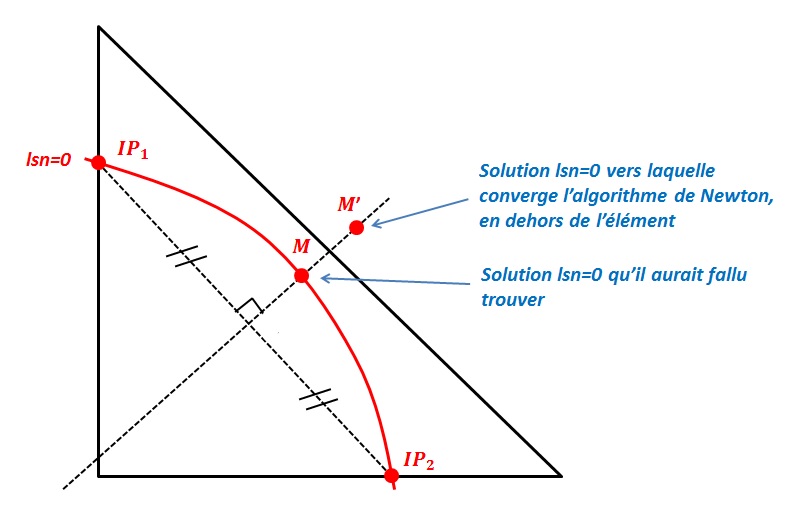

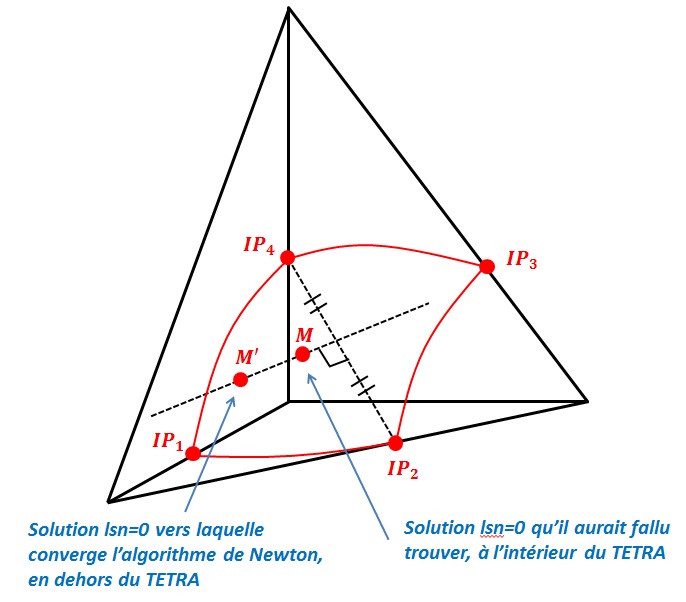

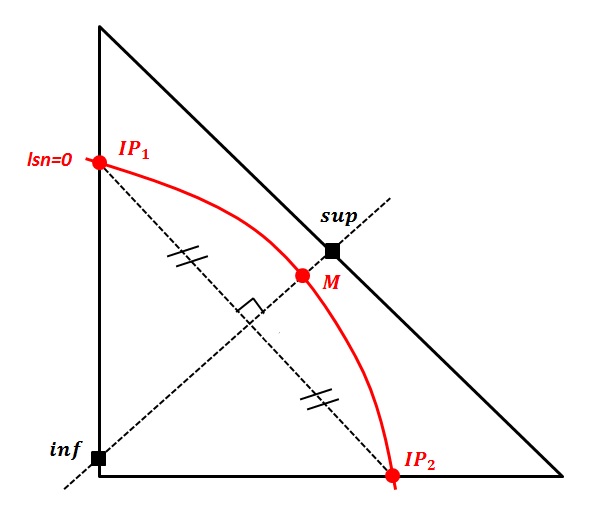

The principle is the same as the volume criterion proposed by Daux [bib 22] for junctions. The idea is to look for each node whose support is cut by the level set, the ratio of the sizes of the zones on both sides of the level set on this support (areas affected by a Heaviside value of ±1). If:

\(\frac{\mathit{min}({V}_{\mathrm{-}1},{V}_{+1})}{{V}_{\mathit{support}}}\mathrm{\le }{10}^{\mathrm{-}\alpha }\) eq, 3.2.6.2-1

the Heaviside degrees of freedom of the node in question are set to zero in all directions.

Figure ‑3.2.6.2-1: illustration of the implementation of the volume criterion. The support for the node cut by the level set is shown. We compare the gray and white volumes with respect to the total volume of the node support (meeting of the gray and white volumes) in the case of a crack (left) and a junction (right).

While this criterion is relevant for linear elements (triangles, tetrahedra) with a value of \(\alpha\) of 4, it is not satisfactory for multi-linear elements (quadrangles, pyramids, pentahedra, hexahedra) and quadratic elements. In fact, the value of \(\alpha\) of 4 leads to the elimination of degrees of freedom that should not be eliminated, which disrupts the solution, while higher values of \(\alpha\) degrade the conditioning. In order to take these elements into account, a stiffness criterion is used, which is based on a comparison of the rigidities of the support and no longer simply of the volumes. For each node whose support is cut by the level set, for this support, we look at the ratio of the rigidities of the zones on both sides of the level set (areas affected by a Heaviside value of ±1). If in a \(n\) node where \(\mathit{se}\) are the sub-elements of its support, we have:

\(\frac{\mathit{min}({\mathrm{\sum }}_{{\mathit{se}}_{\mathrm{-}1}}{\mathrm{\int }}_{{\Omega }_{\mathit{se}}}{\mathrm{\parallel }{\phi }_{n,X}\mathrm{\parallel }}^{2}d{\Omega }_{\mathit{se}},{\mathrm{\sum }}_{{\mathit{se}}_{+1}}{\mathrm{\int }}_{{\Omega }_{\mathit{se}}}{\mathrm{\parallel }{\phi }_{n,X}\mathrm{\parallel }}^{2}d{\Omega }_{\mathit{se}})}{{\mathrm{\sum }}_{\mathit{se}}{\mathrm{\int }}_{{\Omega }_{\mathit{se}}}{\mathrm{\parallel }{\phi }_{n,X}\mathrm{\parallel }}^{2}d{\Omega }_{\mathit{se}}}\mathrm{\le }{10}^{\mathrm{-}\delta }\) eq 3.2.6.2-2

where \({\phi }_{n,X}\) is the derivative of the shape function at node \(n\) in the global direction \(X\), then the Heaviside degrees of freedom at node \(n\) are set to zero in all directions. It will be noted that the behavior is not present in the stiffness criterion of eq, the criterion having been standardized. This makes it possible to discriminate in advance the degrees of freedom to be eliminated, without knowing the problem to be solved (non-linearities, plasticity, contact, etc.). This criterion, which is very similar to a conditioning criterion, leads us to choose values of \(\delta\) between 8 and 10. In practice, we take a value of \(\delta\) out of 9.

The criterion described in eq quantifies, in order of magnitude, the compromise between quality of the solution and packaging. As this criterion is only valid in terms of magnitude, it is not necessarily relevant to calculate all the integrals exactly. In Aster programming, we therefore approximate the integral calculation on the sub-elements ([bib 72]):

\({\int }_{{\Omega }_{\mathrm{se}}}{\parallel {\phi }_{n,X}\parallel }^{2}d{\Omega }_{\mathrm{se}}\approx \text{}{\parallel {\phi }_{n,X}({G}^{\mathrm{se}})\parallel }_{\infty }^{2}{V}^{\mathrm{se}}\) eq 3.2.6.2-3

where \({G}^{\mathrm{se}}\) refers to the barycenter of the sub-element, \({V}^{\mathrm{se}}\) the volume of the sub-element.

Instead of calculating the integral on the sub-element, we evaluate the derivatives of form functions at one point. This strategy makes it possible to capture the criterion for eliminating eq in order of magnitude, with a low calculation cost.

This approximation is robust and inexpensive with linear elements.

On the other hand, with quadratic elements, the derivatives of form functions admit roots. The calculation at one point is risky: the barycenter may be a root of the derivatives of form functions, where the estimate will be zero, but the integral on the sub-element will not be. This poor estimate can lead to random eliminates, which disrupts the solution and is not an acceptable step.

We therefore strengthen the estimate for the quadratic elements, by adding other evaluation points, in addition to the barycenter:

\({\int }_{{\Omega }_{\mathrm{se}}}{\parallel {\phi }_{n,X}\parallel }^{2}d{\Omega }_{\mathrm{se}}\approx \text{}{\mathrm{max}}_{{P}_{i}\in {\Omega }_{\mathrm{se}}}({\parallel {\phi }_{n,X}({P}_{i})\parallel }_{\infty }^{2}){V}^{\mathrm{se}}\) eq 3.2.6.2-4

For the evaluation to be relevant, the points are sufficiently well distributed: we consider the points equidistant between the vertex nodes and the barycenter (for example, 3 points for a tri6).

\(\text{{}{P}_{i}\text{}}=\text{{}{G}^{\mathrm{se}}\text{}}\cup \text{{}\frac{{\mathrm{Node}}_{i}+{G}^{\mathrm{se}}}{2}\text{}}\) eq 3.2.6.2-5

However, the following criticisms can be made in relation to these estimated criteria:

on the one hand, they do not guarantee the control of the packaging just before disposal. As long as the elimination is not performed, the conditioning increases in \({10}^{n\times \gamma }\) (figure), which can lead to the stopping of the direct solver, in an arbitrary manner. This phenomenon is exacerbated with quadratic elements, or even figures.

on the other hand, an analysis of these various criteria is done in [bib 72]. It shows that the removals lead to an absence of convergence of the energy error on a simple example of homogeneous compression of a cube crossed by an inclined interface. The proposed solution consists in replacing the elimination of Heaviside degrees of freedom by an orthogonalization of the local stiffness matrices, an idea that comes from the preconditioner X- FEM of [bib 28]. In order to overcome this last difficulty, it is necessary to estimate local stiffness matrices at least in triple precision [bib 72].

To address the first criticism, that is to say to stem the exponential increase in conditioning, we have implemented in the Code_Aster, the preconditioner proposed by Béchet et al. [bib 28].

This is an automatic and algebraic procedure, to « orthogonalize » the degrees of freedom associated with a XFEM node. In fact, the enrichment functions XFEM rely on the nodal functions \({\Phi }_{i}\) to describe either the discontinuity of the displacement by the jump functions \(H{\Phi }_{i}\), or the crack background by the singular functions \({F}^{\alpha }{\Phi }_{i}\). The functions introduced by enrichment XFEM are not orthogonal to the shape functions in each node XFEM. In addition, the enrichment functions XFEM and the nodal functions share the same support: there are situations where the functions XFEM are similar to the nodal functions, to the point of becoming almost collinear.

Information on collinearity is carried in stiffness matrix \(K\): stiffness matrix conditioning increases if collinearity increases at least in one XFEM node. From a more formal point of view, the stiffness matrix (cf.§ 3.5.1) derives from the discretization of a positive symmetric bilinear form. So, there is a scalar product \({\langle \mathrm{.},\mathrm{.}\rangle }_{K}\) such that, the term in the stiffness matrix \({K}_{i,x}\) representing the collinearity between the nodal function \({\Phi }_{i}\) and the enrichment function \({F}_{x}{\Phi }_{i}\), can be rewritten:

\({K}_{i,x}={\langle {\Phi }_{i},{F}_{x}{\Phi }_{i}\rangle }_{K}\) eq 3.2.6.3-1

Béchet [bib 28] proposes the construction of a pre-conditioner such as:

\(K\to \stackrel{̃}{K}={P}_{c}^{T}K{P}_{c}\) eq 3.2.6.3-2

The original matrix system is then transformed in the following manner:

\(Ku=f\to \{\begin{array}{c}\stackrel{̃}{K}\stackrel{̃}{u}=\stackrel{̃}{f}\\ \stackrel{̃}{f}={P}_{c}^{T}f\\ u={P}_{c}\stackrel{̃}{u}\end{array}\) eq 3.2.6.3-3

Thus, Béchet [bib 28] builds the new matrix \(\stackrel{̃}{K}\) to respect the following orthogonality condition:

\({\stackrel{̃}{K}}_{i,x}={\langle {\Phi }_{i},{F}_{x}{\Phi }_{i}\rangle }_{\stackrel{̃}{K}}={\langle {P}_{c}{\Phi }_{i},{P}_{c}{F}_{x}{\Phi }_{i}\rangle }_{K}=0\) eq 3.2.6.3-4

In other words, the new functional base \(\left({P}_{c}{\Phi }_{i},{P}_{c}{F}_{x}{\Phi }_{i}\right)\) (through the change of base \({P}_{c}\)) is orthogonal, on the support of each node XFEM. Therefore, the new matrix system to be inverted \(\stackrel{̃}{K}\stackrel{̃}{u}={P}_{c}^{T}f\) is better conditioned regardless of the position of the interface, cf. § 3.2.6.2.

The construction of \(\stackrel{̃}{K}\) is not a unique step. [bib 28] proposes to use the Cholesky factorization of local stiffness matrices, associated with each XFEM node:

\({K}_{\mathit{loc}}^{i}=\left[\begin{array}{ccc}{\langle {\Phi }_{i},{\Phi }_{i}\rangle }_{K}& {\langle {\Phi }_{i},H{\Phi }_{i}\rangle }_{K}& {\langle {\Phi }_{i},{F}^{\alpha }{\Phi }_{i}\rangle }_{K}\\ {\langle {\Phi }_{i},H{\Phi }_{i}\rangle }_{K}& {\langle H{\Phi }_{i},H{\Phi }_{i}\rangle }_{K}& {\langle H{\Phi }_{i},{F}^{\alpha }{\Phi }_{i}\rangle }_{K}\\ {\langle {\Phi }_{i},{F}^{\alpha }{\Phi }_{i}\rangle }_{K}& {\langle H{\Phi }_{i},{F}^{\alpha }{\Phi }_{i}\rangle }_{K}& {\langle {F}^{\alpha }{\Phi }_{i},{F}^{\alpha }{\Phi }_{i}\rangle }_{K}\end{array}\right]\) eq 3.2.6.3-5

The matrix \({K}_{\mathit{loc}}^{i}\) is symmetric definite positive. It therefore admits a factorized Cholesky, that is to say, that there is an upper triangular matrix \({S}_{i}\) such that:

\({K}_{\mathit{loc}}^{i}={S}_{i}^{T}{S}_{i}\) eq 3.2.6.3-6

In an optimal storage configuration contiguous with degrees of freedom, [bib 28] then chooses one diagonal preconditioner per block, such as:

\({P}_{c}=\left[\begin{array}{cccccc}{P}_{c}^{1}& 0& 0& \mathrm{...}& \mathrm{...}& \mathrm{...}\\ 0& {P}_{c}^{2}& 0& \mathrm{...}& 0& \mathrm{...}\\ 0& 0& {P}_{c}^{3}& \mathrm{...}& \mathrm{...}& \mathrm{...}\\ \mathrm{...}& \mathrm{...}& \mathrm{...}& \ddots & \mathrm{...}& \mathrm{...}\\ \mathrm{...}& 0& \mathrm{...}& \mathrm{...}& {P}_{c}^{i}& \mathrm{...}\\ \mathrm{...}& \mathrm{...}& \mathrm{...}& \mathrm{...}& \mathrm{...}& \ddots \end{array}\right]\) eq 3.2.6.3-7

where \({P}_{c}^{i}=\{\begin{array}{c}{S}_{i}^{-1}\text{si}i\text{est un noeud XFEM}\\ {I}_{d}\text{sinon}\end{array}\) eq 3.2.6.3-8

with \({S}_{i}^{-1}\) the inverse of the Cholesky factored matrix. The new stiffness matrix \(\stackrel{̃}{K}\) verifies the support of the XFEM node locally:

\({\stackrel{̃}{K}}_{\mathit{loc}}^{i}={\left({P}_{c}^{i}\right)}^{T}{K}_{\mathit{loc}}^{i}{P}_{c}^{i}={\left({S}_{i}^{-1}\right)}^{T}{K}_{\mathit{loc}}^{i}{S}_{i}^{-1}={\left({S}_{i}^{-1}\right)}^{T}\left({S}_{i}^{T}{S}_{i}\right){S}_{i}^{-1}={I}_{d}\) eq 3.2.6.3-9

\({I}_{d}\) refers to the identity matrix. \({I}_{d}\) therefore respects the orthogonality condition of the equation.

In practice, it is necessary to scale the new \({\stackrel{̃}{K}}_{\mathit{loc}}^{i}\) matrix with the rest of the stiffness matrix. The following preconditioner is therefore preferred to the preconditioner:

\({P}_{c}^{i}=\sqrt{\mathit{scal}}\times {S}_{i}^{-1}\) eq 3.2.6.3-10

where \(\mathit{scal}\) is a scaling coefficient such as: \(\mathit{scal}=\frac{\mathit{max}(∣\mathit{diag}(K)∣)+\mathit{min}(∣\mathit{diag}(K)∣)}{2}\)

Consequently, the new pre-conditioned local matrix complying with the orthogonality condition is:

\({\stackrel{̃}{K}}_{\mathit{loc}}^{i}={\left({P}_{c}^{i}\right)}^{T}{K}_{\mathit{loc}}^{i}{P}_{c}^{i}=\mathit{scal}\times {I}_{d}\) eq 3.2.6.3-11

Moreover, the use of a Cholesky factorization is risky if the conditioning of the local stiffness matrix \({K}_{\mathit{loc}}^{i}\) deteriorates. In case of failure of the Cholesky factorization, we orthogonalize the local matrix using a SVD (decomposition into singular values):

\({K}_{\mathit{loc}}^{i}={U}_{i}{D}_{i}{U}_{i}^{T}\) eq 3.2.6.3-12

where \({U}_{i}\) is an orthogonal matrix and \({D}_{i}\) is a diagonal matrix with strictly positive values.

The following choice of preconditioner is then made alternately:

\({P}_{c}^{i}=\sqrt{\mathit{scal}}\times {U}_{i}\sqrt{{D}_{i}^{-1}}\) eq 3.2.6.3-13

It is easy to verify that this choice makes it possible to respect the orthogonality condition:

\({\stackrel{̃}{K}}_{\mathit{loc}}^{i}={\left({P}_{c}^{i}\right)}^{T}{K}_{\mathit{loc}}^{i}{P}_{c}^{i}={\left(\sqrt{\mathit{scal}}\times {U}_{i}\sqrt{{D}_{i}^{-1}}\right)}^{T}\left({U}_{i}{D}_{i}{U}_{i}^{T}\right)\left(\sqrt{\mathit{scal}}\times {U}_{i}\sqrt{{D}_{i}^{-1}}\right)=\mathit{scal}\times {I}_{d}\)

In summary, the construction of the Béchet preconditioner [bib 28] involves 4 steps:

the extraction of local matrices \({K}_{\mathit{loc}}^{i}\) in the stiffness matrix (i.e. block matrices associated with the ddls carried by the nodes XFEM no. \(i\)).

the calculation of local pre-conditioning matrices \({P}_{c}^{i}\) (cf. equation or),

assembling the pre-conditioner (cf. equation),

finally, the transformation of the matrix system (cf. equations and).

Note:

Note that the transformation of the matrix system presents a significant computer difficulty in the code_aster, given the complexity of the data structure for storing matrices (cf. [D4.06.10] and [D4.06.07]).

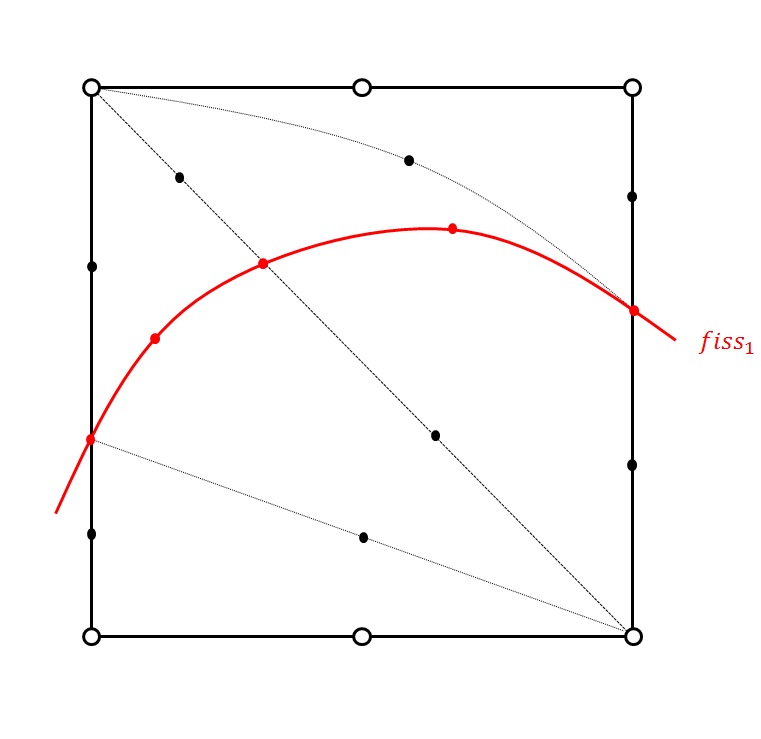

3.3. Sub-division#

Particular attention must be paid when numerically integrating the terms of stiffness and the second member of an element crossed by the crack. In fact, on an element crossed by a crack, the displacement gradients may be discontinuous, and in this case the numerical integration of Gauss-Legendre over the whole of the element is not applicable. In order to return to conventional conditions of regularity, integration should be carried out in areas where the integrand is at least continuous. For an element crossed by a crack, it is therefore necessary to integrate separately on both sides of the crack (this appears for the first time in [bib 24] for 2D and in [bib 32] for 3D). Several procedures are possible, and easily implemented in 2D. The difficulties appear with 3D.

An attempt is made to sub-cut into sub-tetrahedra any volume element (tetrahedron, pentahedron, hexahedron) cut by a surface. It should be noted that this cut is only used for integration purposes, it is purely virtual and no node is added to the mesh. The mesh is not changed in any way.

For pentahedra and hexahedra, the number of possibilities being too large and the configurations too complicated, it is preferable to reduce ourselves to the cutting of tetrahedra. In this way, the large number of cutting possibilities is « condensed » into a few configurations. In other words, we are formulating the « clustering » of a large set (number of intersection configurations) to a small set (reduced number of cutting configurations in a tetrahedron).

In fact, the « condensation » algorithm builds a bijective application, from the parent polyhedron, to some reference cutting configurations. This application can be broken down into two bijective operations:

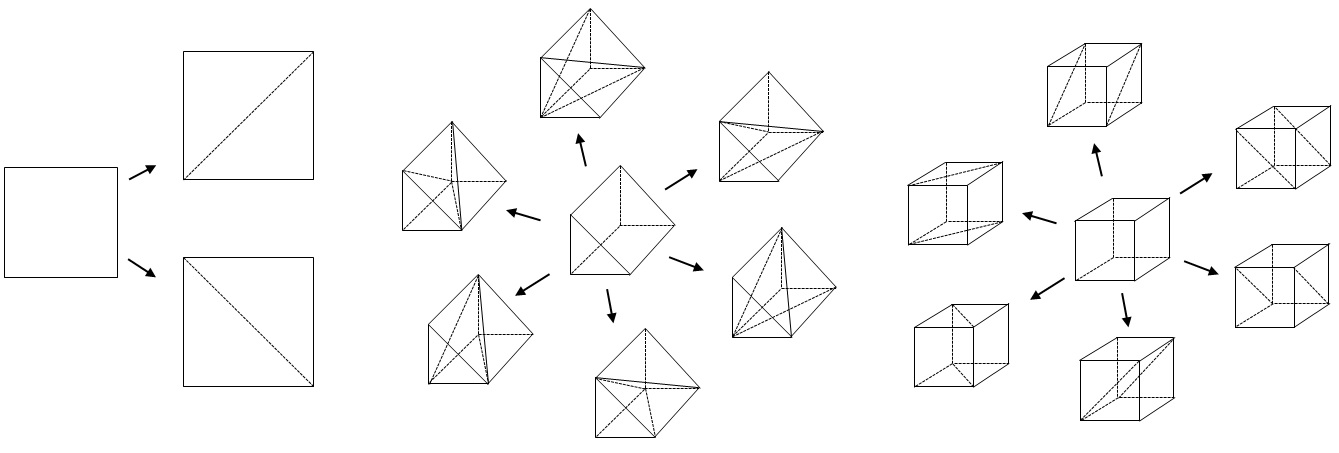

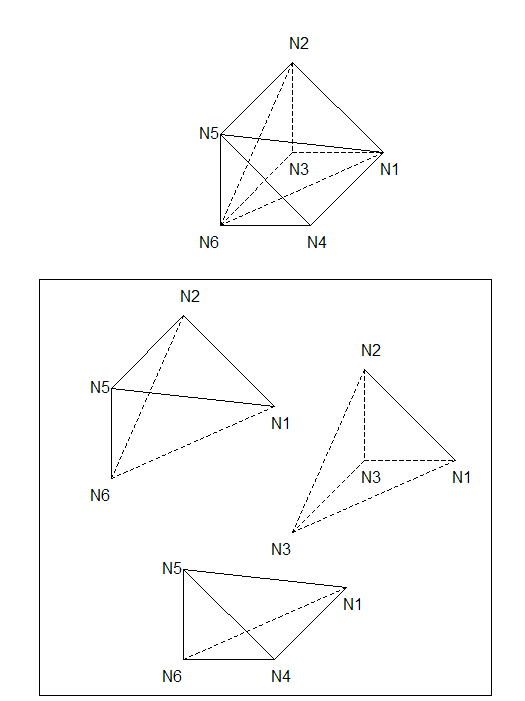

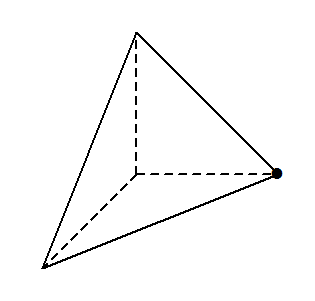

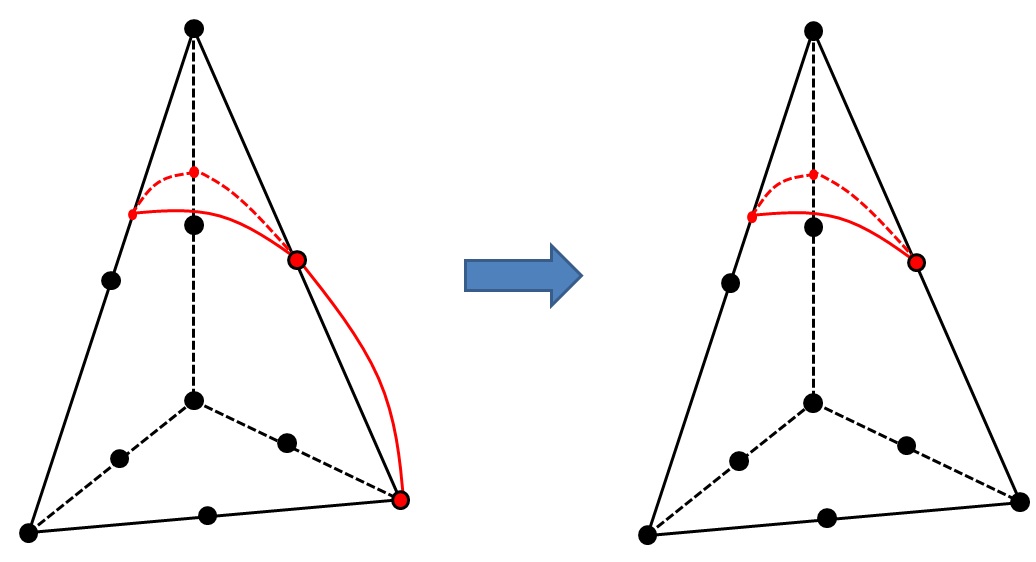

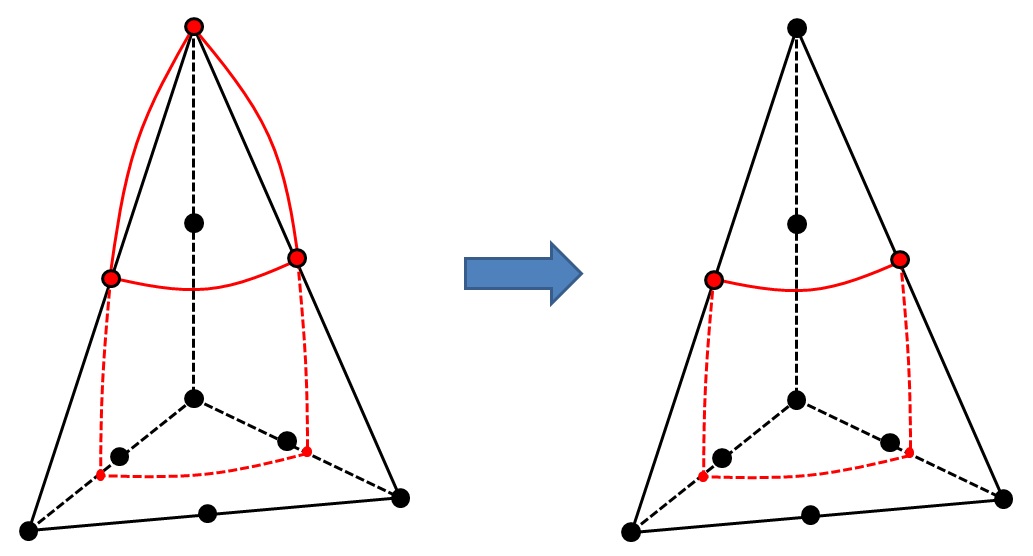

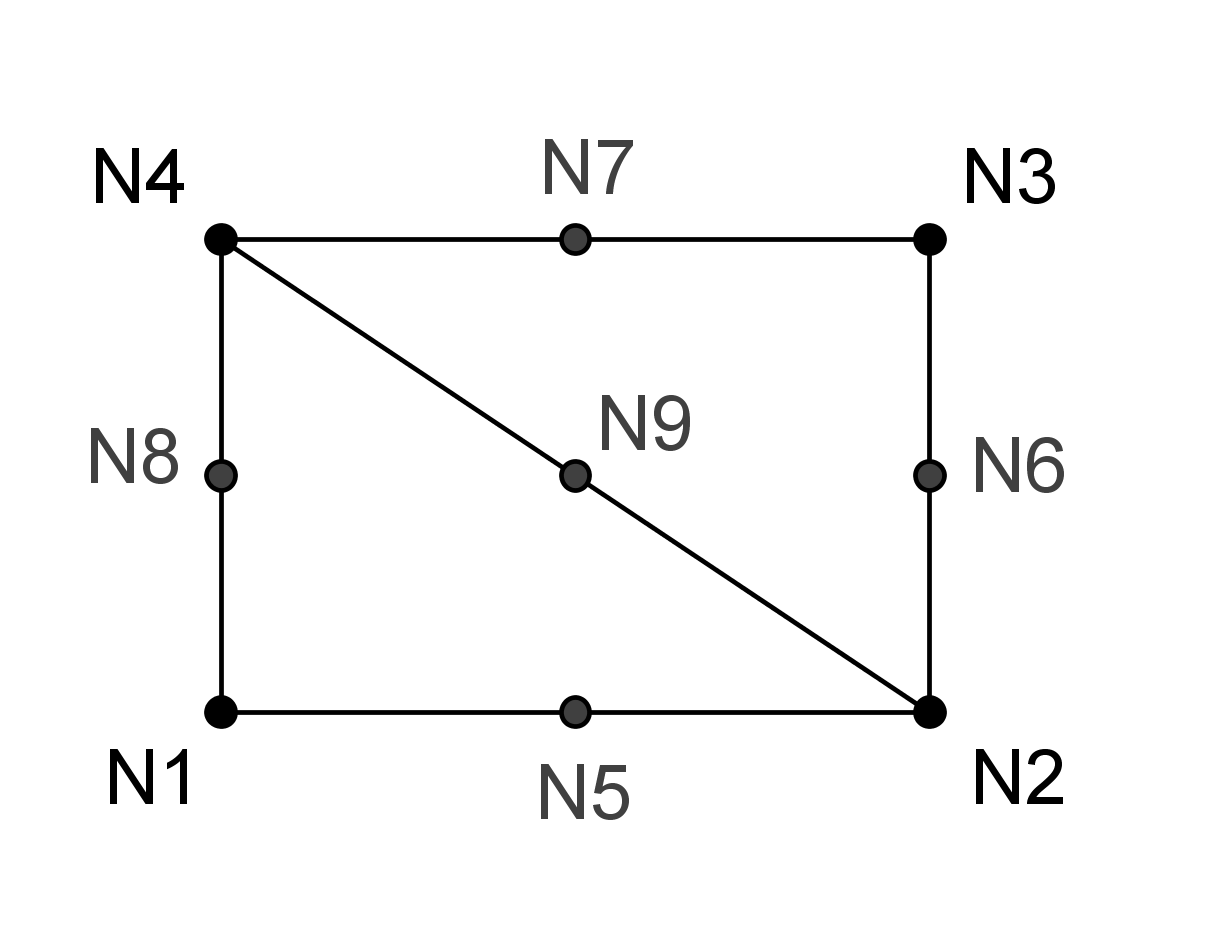

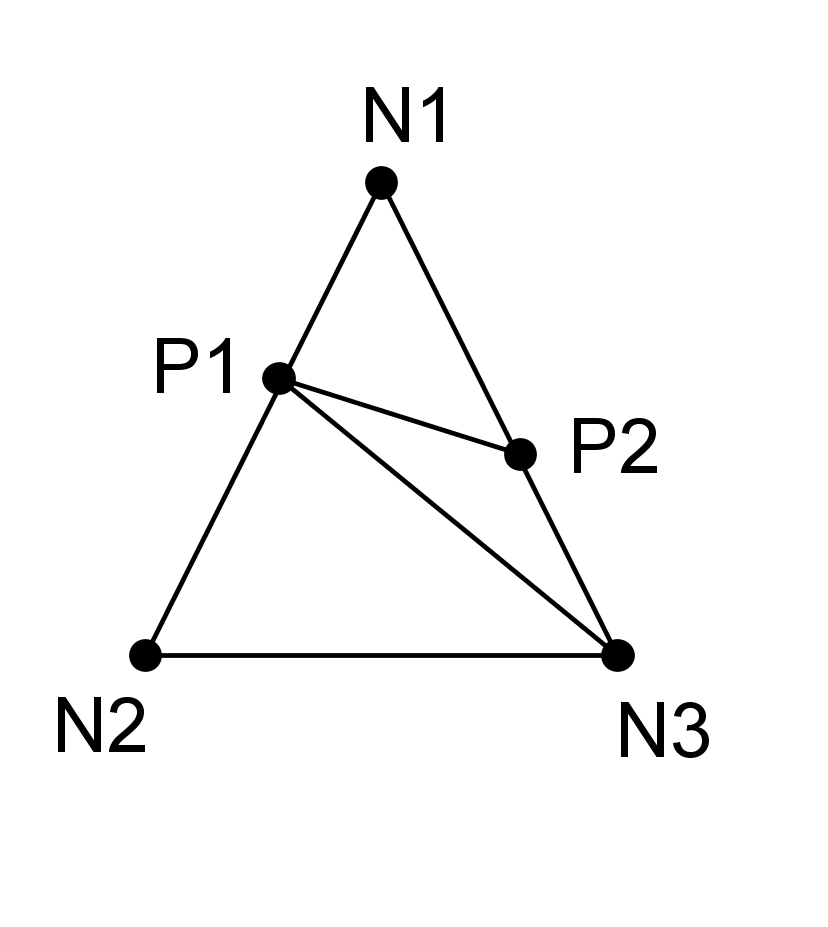

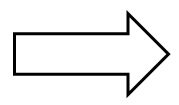

1 — An (explicit) bijection towards the cutting of a tetrahedron: We therefore carry out a preliminary phase which consists in systematically cutting quadrangles, pyramids, pentahedra and hexahedra into tetrahedra. This bijection is not unique. As can be seen on the, there are two bijections to divide a quadrangle into two triangles (the same for a pyramid), 6 bijections to divide a pentahedron into 3 tetrahedra and 6 bijections to divide a hexahedron into 2 pentahedra which are then divided into tetrahedra. In the case of the hexahedron, each cutting configuration is thus obtained twice. The maximum number of distinct bijections to divide a hexahedron into 6 tetrahedra is therefore \(\frac{{6}^{3}}{2}=108\).

Figure 3.3-1 : Dividing a quadrangle into two triangles (left), dividing a pentahedron into 3 tetrahedra (middle), and dividing a hexahedron into 2 pentahedra (right)

In § 3.3.1 to § 3.3.3, we present the bijection selected by default for the division of non-simplicial elements into tetrahedra (or triangles for the 2D case).

This is a bijection, because we build an (invertible) application that associates the relative numbering of the vertex nodes in a subtetrahedron with the absolute numbering of the nodes in the mesh. The direct application is stored in the connectivity table (see [D4.10.02]: data structures XFEM).

2 — An (implicit) bijection to a reference cutting configuration: on the one hand, we identify the reference cutting configuration corresponding to the tetrahedron to be cut, on the other hand, we « geometrically » return the tetrahedron, to « superimpose » it on this reference cutting configuration (for example). Clearly, the nodes of the subtetrahedron are identified with the nodes of the reference cutting element (this is the direct application of bijection). The multiple cutting possibilities, linked to the different possibilities of ordering the edges and the points of intersection, are then « condensed ».

Subsequently, this operation facilitates the scheduling of the middle nodes of the quadratic subtetrahedra for computer storage. In fact, the ordering of the new middle nodes no longer depends on the faces or edges where they are calculated, but on a single reference cut configuration. Consequently, the new calculated middle nodes are necessarily ordered in the same way, in the reference cut subtetrahedron. Then, these middle nodes are positioned on the edges of the tetrahedron by the reverse application (from the identification of the nodes above). The bijection used is therefore tacit.

The procedures for scheduling and calculating midpoints are explained below.

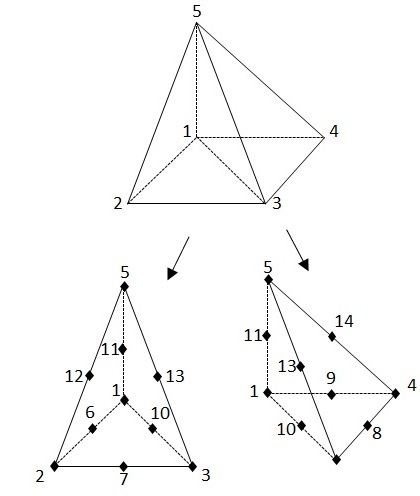

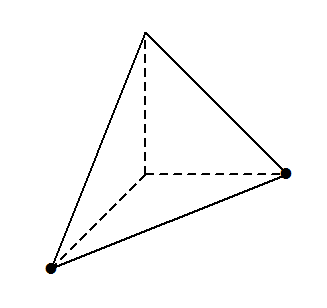

3.3.1. Preliminary phase of cutting hexahedra to reduce to tetrahedra#

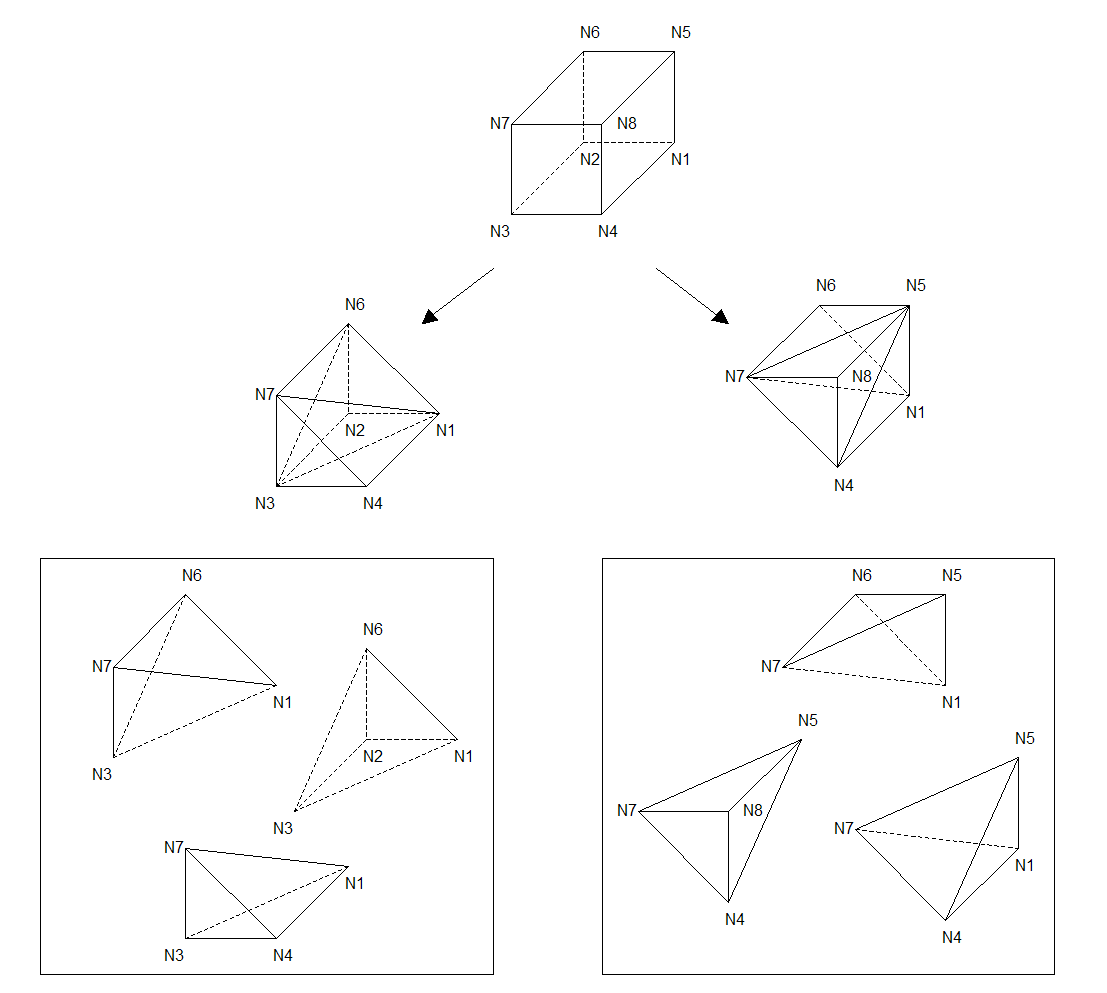

Figure 3.3.1-1 : Division of a hex ahedron (hexa8) into tetrahedra

A hexahedron is then divided into 6 tetrahedra, listed in the following table:

hexahedron |

tetrahedron |

\(\mathit{N1}\mathit{N2}\mathit{N3}\mathit{N4}\mathit{N5}\mathit{N6}\mathit{N7}\mathit{N8}\) |

\(\mathit{N7}\mathit{N4}\mathit{N3}\mathit{N1}\) |

\(\mathit{N1}\mathit{N6}\mathit{N2}\mathit{N3}\) |

|

\(\mathit{N3}\mathit{N6}\mathit{N7}\mathit{N1}\) |

|

\(\mathit{N6}\mathit{N1}\mathit{N5}\mathit{N7}\) |

|

\(\mathit{N4}\mathit{N7}\mathit{N8}\mathit{N5}\) |

|

\(\mathit{N4}\mathit{N5}\mathit{N1}\mathit{N7}\) |

Table 3.3.1-1 : Division of a hexahedron into tetraheders

Note that this division is the choice made by default but we could have chosen another way of cutting a hexahedron into 2 pentahedra as well as another way of cutting a pentahedron into 3 tetrahedra.

If the element is quadratic (HEXA20, PENTA15,…), the same subdivision into tetrahedra is used, as explained above.

In addition, the middle nodes on the new edges generated by the cut must be taken into account: these middle nodes coincide with the middle nodes of the complete element. We therefore virtually add to the element, the middle nodes necessary to find the complete element of.

Figure 3.3.1-2 : Division of a quadratic hexahedron (hexa 20) via the complete element (hexa 27)

3.3.2. Preliminary phase of cutting pentahedra to reduce to tetrahedra#

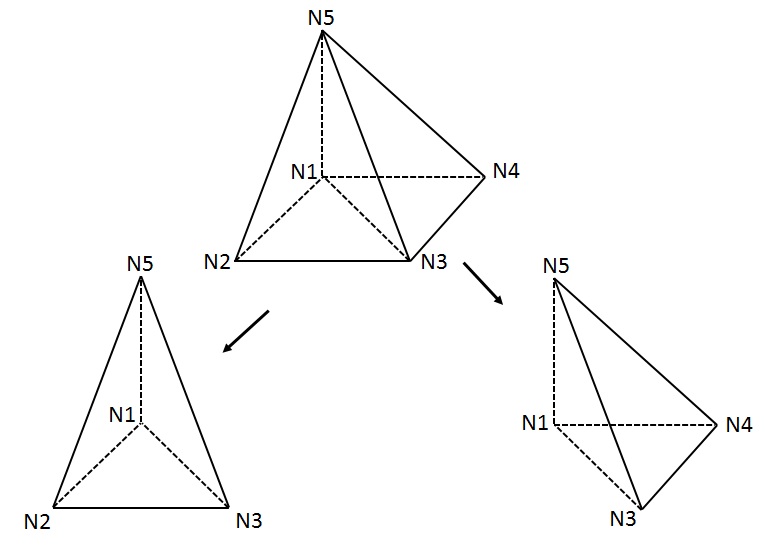

Figure 3.3.2-1 : Diagram for dividing a pentahedron into tetraheders

A pentahedron is then divided into 3 tetrahedra, listed in the following table:

pentahedron |

tetrahedron |

\(N1N2N3N4N5N6\) |

\(N5N4N6N1\) |

\(N1N2N3N6\) |

|

\(N6N2N5N1\) |

Table 3.3.2-1 : Division of a pentahedron into tetraheders

For a quadratic pentahedron (penta15) the above subdivision is retained.

In addition, the middle nodes on the new edges generated by the cut must be taken into account: these middle nodes coincide with the middle nodes of the complete element. We therefore add to the element, the middle nodes necessary to find the complete element.

Figure 3.3.2-2: Division of a quadratic pentahedron

3.3.3. Preliminary phase of cutting the pyramids to be reduced to tetrahedra#

Figure 3.3.3-1 : Diagram of dividing a pyramid into tetraheders

A pyramid is then divided into 2 tetrahedra, listed in the following table:

pyramid |

tetrahedron |

\(N1N2N3N4N5\) |

\(N1N3N4N5\) |

\(N1N2N3N5\) |

Table 3.3.3-1 : Dividing a pyramid into tetraheders

For a quadratic pyramid (pyra13) the above subdivision is retained.

In addition, the middle nodes on the new edges generated by the cut must be taken into account: these middle nodes coincide with the middle nodes of the complete element. We therefore add to the element, the middle nodes necessary to find the complete element.

Figure 3.3.3-2: Division of a quadratic pyramid

Note:

L the subdivision of a quadrangle e into two triangles is similar to the subdivision of a pyramid into two tetrahedra.

3.3.4. Sub-division of a tetrahedron into sub-tetrahedra#

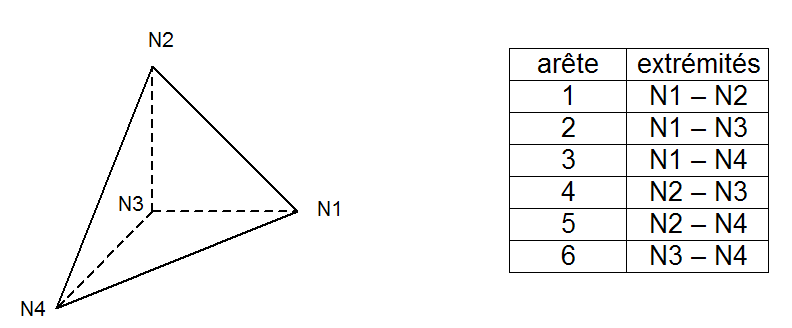

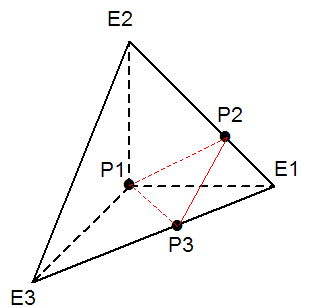

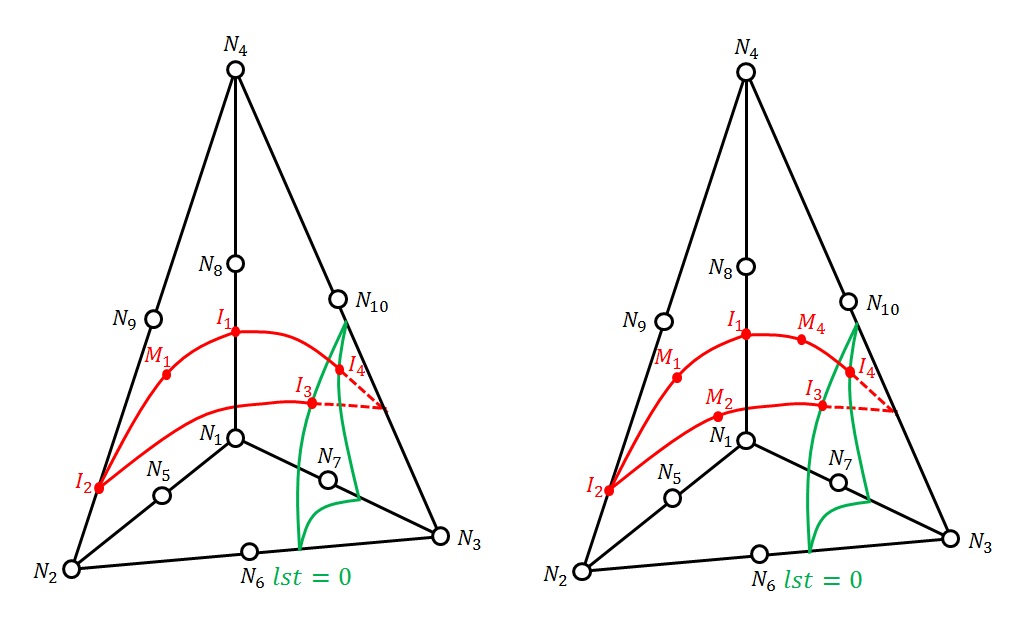

The reference tetrahedron is set to Figure 3.3.4-1. The intersection points \(\mathit{Pi}\) between the surface \({\mathrm{lsn}}^{h}=0\) and the edges of the tetrahedron are determined.

Let \(n\) be the number of intersection points \(\mathit{Pi}\).

At each intersection point \(\mathit{Pi}\), we associate two integers: \(\mathit{Ai}\) and \(\mathit{NSi}\)

\(\mathit{Ai}\) is the number of the edge where \(\mathit{Pi}\) is located (for example if \(\mathit{Pi}\) is on the end edge \(\mathit{N2}\mathrm{-}\mathit{N3}\), then \(\mathit{Ai}\mathrm{=}4\)). In the case where \(\mathit{Pi}\) coincides with a vertex node of the tetrahedron, Ai is equal to 0,

\(\mathit{NSi}\) is the number of the vertex node in the case where \(\mathit{Pi}\) coincides with a vertex node of the tetrahedron (for example if \(\mathit{Pi}\) coincides with \(\mathit{N3}\), then \(\mathit{NSi}\mathrm{=}3\)). In the case where \(\mathit{Pi}\) is on an edge, \(\mathit{NS}\) is equal to 0.

Note:

The product of \(\mathit{Ai}\) by \(\mathit{NSi}\) is always 0.

The intersection points are then sorted in ascending order of \(\mathit{Ai}\). The intersection points coinciding with vertex nodes will therefore be found at the beginning of the list.

Figure 3.3.4-1 : Reference tetrahedron

Since the approximation of the level set uses the shape functions of the tetrahedron, the surface \({\mathit{lsn}}^{h}=0\) is then a plane with linear elements, and a surface with possibly curved geometry, with quadratic elements.

The problem therefore comes down to the cutting of a tetrahedron by a surface. Let’s look at the different possible cases depending on the value of \(n\) (number of intersection points \(\mathit{Pi}\)). We can already eliminate the trivial cases where no sub-division is necessary:

when \(n<3\) the surface trace in the tetrahedron is a vertex or an edge. If surface \({\mathit{lsn}}^{h}=0\) is not a plane, the geometry of the level-set is then approximated by the edge of the tetrahedron.

when \(n\mathrm{=}3\) and the 3 points of intersection are vertex points, the trace of the surface in the tetrahedron is one side of the tetrahedron. If surface \({\mathrm{lsn}}^{h}=0\) is not a plane, the geometry of the level-set is then approximated by the face of the tetrahedron.

In these two cases, a single subtetra is obtained, which corresponds to the tetrahedron.

Figure 3.3.4-2 : Case without undercut

\(\mathit{P1}\) and \(\mathit{P2}\) are necessarily the two summit points (so \(\mathit{A1}\mathrm{=}\mathit{A2}\mathrm{=}0\)). Based on \(\mathit{A3}\), the edge number corresponding to \(\mathit{P3}\), we can determine the 2 ends of this edge, that is, \(\mathit{E1}\) and \(\mathit{E2}\).

Figure 3.3.4.1-1 : General case where \(n\mathrm{=}3\) including 2 vertex points

One subtetrahedron is obtained on each side of the interface, i.e. in the end 2 subtetrahedra.

2 subtetrahedra |

\(\mathit{P1}\mathit{P2}\mathit{P3}\mathit{E1}\) + \(\mathit{P1}\mathit{P2}\mathit{P3}\mathit{E2}\) |

Table 3.3.4.1-1 : Sub-tetraheders

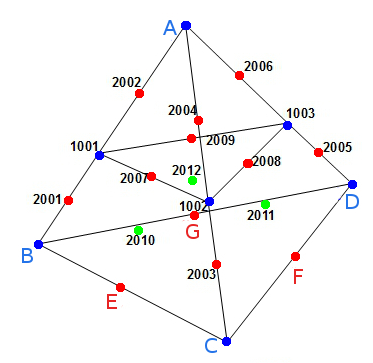

To reduce the cutting possibilities, (since there are at least 6 cutting possibilities taking into account the 6 edges in the subtetrahedron) a bijection towards a unique cutting element is constructed. The nodes \(A\), \(B\), \(C\),,, \(D\),,,,,,, \(E\),,, \(F\), \(G\), \(H\) define the unknowns of sequencing of the subtetrahedron cut. Scheduling unknowns will be uniquely associated with the nodes of the parent element. This association constructs a (implicit) bijection.

Recall that,

scheduling is important from a computer point of view, to ensure the storage of the virtual under-cut mesh. In particular, to locate intersection points and midpoints in data structures XFEM,

the nodes with a number between 1000 and 1999 represent the intersection points, these nodes are calculated with the procedure described in §4.2.3 of [D4.10.02].

the nodes with a number greater than 2000 represent the new middle nodes resulting from the cut, these nodes are calculated with the procedure described in §4.2.3 of [D4.10.02]

Note:

For this particular cutting configuration, the construction of the bijection is not**entirely deterministic. In fact, the 2 sub-tetrahedra are symmetric with respect to the cutting plane. From a topological point of view, the unknowns {\(A`*, * ** * ** * ** * **, *:math:`D`*, * *,* *,* :math:`E\) }} are symmetric to the unknowns { \(B\) , \(F\) * , ,, * , , \(G\) }. To make the cutting of a given mesh deterministic, we chose the following arbitrary convention: taking \(A\) as the first node on the edge of the first intersection point.

By analogy to molecular configurations, cutting will potentially generate two configurations called « enantiomers » .

Figure 3.3.4.1-2: cutting element for the configuration 4 intersection points including 2 vertex nodes

\(P1\) is necessarily the summit point (so \(A1=0\) and \(A2\ne 0\)). Edges \(A2\) and \(A3\) have one node in common, \(E1\), and 2 different nodes \(E2\) and \(E3\).

Figure 3.3.4.2-1 : General case where \(n=3\) including a summit point

We obtain a subtetrahedron on one side, and on the other a pyramid that is divided into 2 subtetrahedra, or in the end 3 subtetrahedra.

3 subtetrahedra |

\(\mathit{P1}\mathit{P2}\mathit{P3}\mathit{E1}\) + \(\mathit{P1}\mathit{P2}\mathit{P3}\mathit{E3}\) + \(\mathit{P1}\mathit{P2}\mathit{E2}\mathit{E3}\) |

Table 3.3.4.2-1 : Sub-tetraheders

To reduce the possibilities of cutting, a bijection is constructed to a unique cutting element. Nodes \(A\), \(B\), \(C\), \(E\),, \(F\),,, \(G\), \(H\) define the sequencing unknowns of subtetrahedron cutting. Scheduling unknowns will be uniquely associated with the nodes of the parent element. This association constructs a (implicit) bijection.

Figure 3.3.4.2-2: cutting element for the configuration 3 intersection points including 1 vertex node

This cutting case corresponds to a flat configuration. This is a particular case of the previous cut for which surface \(\mathit{lsn}=0\) also passes through another vertex node. The cut is then strictly identical to the previous cut (Paragraph [§ 3.3.4.2]). This cutting configuration is represented with on the right the configuration with 3 intersection points and a vertex point to which we refer to*.*

Figure 3.3.4.3-1: Grazing configuration with 5 intersection points (left) and corresponding healthy configuration (right)

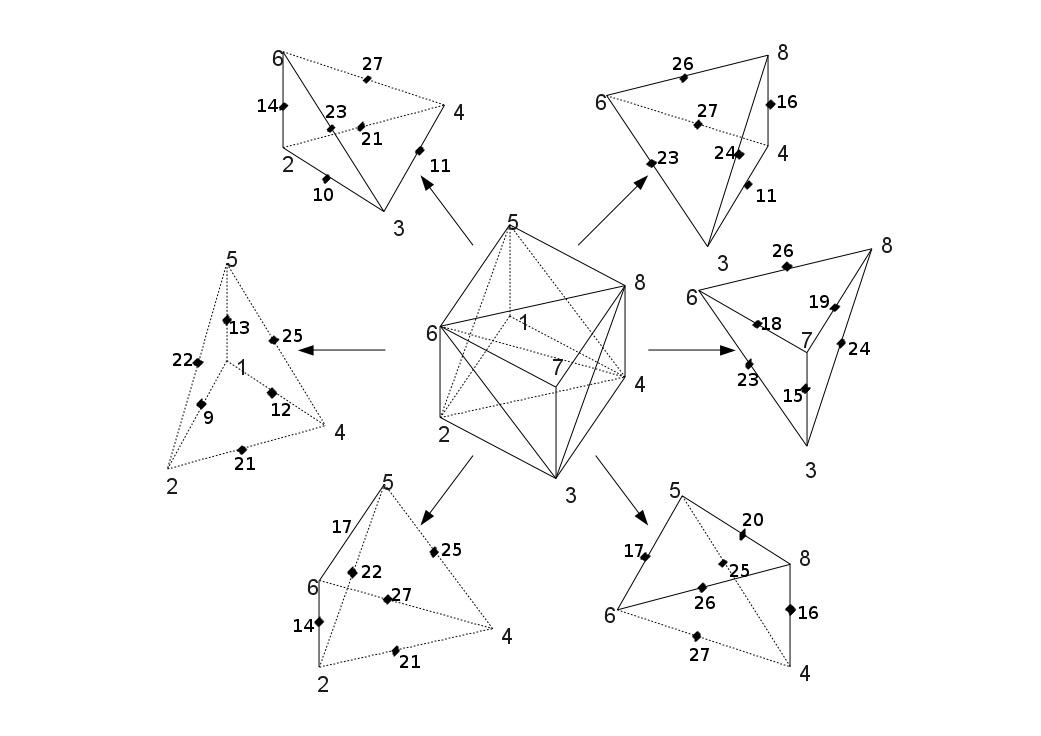

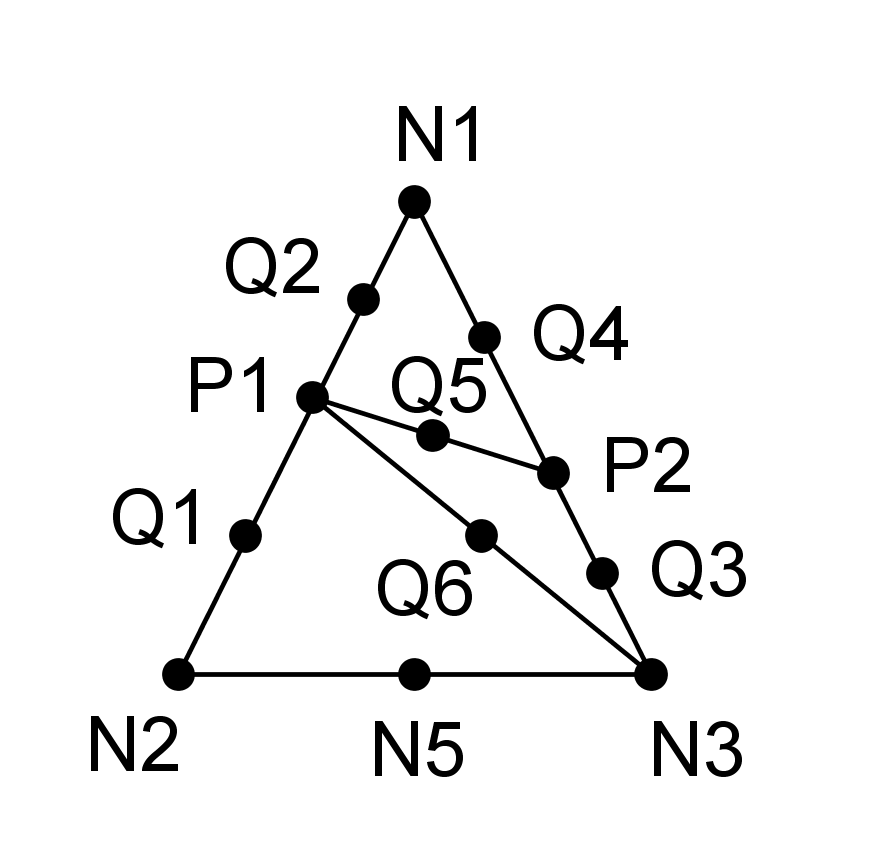

There are at least 4 cutting options, listed Figure 3.3.4.4-1. These various possibilities are not treated on a case-by-case basis.

A bijection is constructed to a unique cutting element. Nodes \(A\), \(B\), \(C\), \(D\),, \(E\),,, \(F\), \(G\) define the sequencing unknowns of subtetrahedron cutting. Scheduling unknowns will be uniquely associated with the nodes of the parent element. This association constructs a (implicit) bijection.

Example of identification procedure:

In each of the configurations listed Figure 3.3.4.4-1 , we associate the scheduling unknowns n œ*uds* \(\text{{}A,B,C,D\text{}}\) with the n œ*uds* \(\text{{}{N}_{\mathrm{1,}}{N}_{\mathrm{2,}}{N}_{\mathrm{3,}}{N}_{4}\text{}}\) of the sub-tetrahedron. As the node \(A\) belongs to the three cut edges, it is easily identified with the corresponding node in each of the configurations listed. Then, we then identify \(\text{{}B,C,D\text{}}\) since the n œ*uds* \(B\) , \(C\) , \(D\) belong respectively to the first, second, third intersected edge, and are different from the node \(A\) previously identified. The result is the correspondence table

This identification procedure makes it possible to construct a bijection implicitly.

Figure 3.3.4.4-1: Configurations of a tetrahedron with 3 intersecting edges

Figure 3.3.4.4-2: cutting element for the configuration with 3 intersection points and no vertex nodes

From to |

|

\((A,B,C,D)\) |

\(({N}_{\mathrm{1,}}{N}_{\mathrm{2,}}{N}_{\mathrm{3,}}{N}_{4})\) |

\((A,B,C,D)\) |

\(({N}_{\mathrm{2,}}{N}_{\mathrm{1,}}{N}_{\mathrm{3,}}{N}_{4})\) |

\((A,B,C,D)\) |

\(({N}_{\mathrm{3,}}{N}_{\mathrm{1,}}{N}_{\mathrm{2,}}{N}_{4})\) |

\((A,B,C,D)\) |

\(({N}_{\mathrm{4,}}{N}_{\mathrm{1,}}{N}_{\mathrm{2,}}{N}_{3})\) |

Table 3.3.4.4-1: Identifying the nodes of the cutting element to the nodes of the subtetrahedron in each configuration

This cutting case corresponds to a flat configuration. This is a particular case of the previous cut for which surface \(\mathit{lsn}=0\) also passes through one of the vertex nodes. The cut is then strictly identical to the previous cut (Paragraph [§ 3.3.4.4] ). This cutting configuration is represented with, on the right, the configuration with 3 intersection points to which we refer.

Figure 3.3.4.5-1: Grazing configuration with 4 intersection points (left) and corresponding healthy configuration (right)

This cutting case corresponds to a flat configuration. This is another particular case of the previous cut for which surface \(\mathit{lsn}=0\) also passes through two of the vertex nodes. The cut is then strictly identical to the previous cut (Paragraph [§ 3.3.4.4]). This cutting configuration is represented with, on the right, the configuration with 3 points of intersection to which we refer to*.*

Figure 3.3.4.6-1: Grazing configuration with 6 intersection points (left) and corresponding healthy configuration (right)

There are at least 3 cutting options, listed. These various possibilities are not treated on a case-by-case basis.

A bijection is constructed to a unique cutting element. Nodes \(A\), \(B\), \(C\), \(D\),, \(E\), \(F\), define the sequencing unknowns of subtetrahedron cutting. Scheduling unknowns will be uniquely associated with the nodes of the parent element. This association constructs a (implicit) bijection.

Figure 3.3.4.7-1: Configurations of a tetrahedron with 4 intersecting edges

Figure 3.3.4.7-2: cutting element for the configuration with 4 intersection points and no vertex nodes

This cutting case corresponds to a flat configuration. This is a particular case of the previous cut for which surface \(\mathit{lsn}=0\) also passes through one of the vertex nodes. The cut is then strictly identical to the previous cut (Paragraph [§ 3.3.4.7]). This cutting configuration is represented with, on the right, the configuration with 4 intersection points to which we refer.

Figure 3.3.4.8-1: Grazing configuration with 5 intersection points (left) and corresponding healthy configuration (right)

This cutting configuration corresponds to a flat configuration. It does not boil down to any configuration previously described. It can be distinguished from the configuration described in Paragraph [§ 3.3.4.5]) (which also includes 4 intersection points including a vertex point) because the vertex node not intersected on the grazing edge belongs to only two intersected edges (instead of 3 in the case described in Paragraph [§ 3.3.4.5]). There are at least 24 cutting options, 3 of which are listed in the. These various possibilities are not treated on a case-by-case basis.

Figure 3.3.4.9-1: Configurations of a tetrahedron with 4 intersection points including a vertex point

A bijection is constructed to a unique cutting element. Nodes \(A\), \(B\), \(C\), \(D\),, \(E\), \(F\), define the sequencing unknowns of subtetrahedron cutting. Scheduling unknowns will be uniquely associated with the nodes of the parent element. This association constructs a (implicit) bijection. This cutting configuration gives rise to 5 child subtetrahedra.

Figure 3.3.4.9-2: cutting element for the configuration with 4 intersection points including a vertex node

3.3.5. Multi-die-cutting#

When you want to model junctions, intersections or simply that two cracks are close enough to cut the same element, you must be able to divide the element into domains that respect all the discontinuities introduced.

The strategy adopted consists in cutting the element several times sequentially. This is a strategy that has the merit of being fairly quick to implement, because the cutting of a reference tetrahedron by a crack generates sub-tetrahedra which can in turn be considered as reference tetrahedra for cutting by the next crack.

The problem is that we do not optimize the total number of sub-elements generated, which may be very high if we recut more than 3 times in 3D. To solve this problem, it would be necessary to be able to directly cut any type of element (hexahedron, pentahedron, pyramid, tetrahedron) in combination of all these types of elements. This requires fairly heavy developments and is therefore not an option. Another strategy would be to group sub-items by zone and find the optimal number of Gauss points (and weights) per zone. We would no longer store the sub-elements but directly the areas with the associated gauss points.

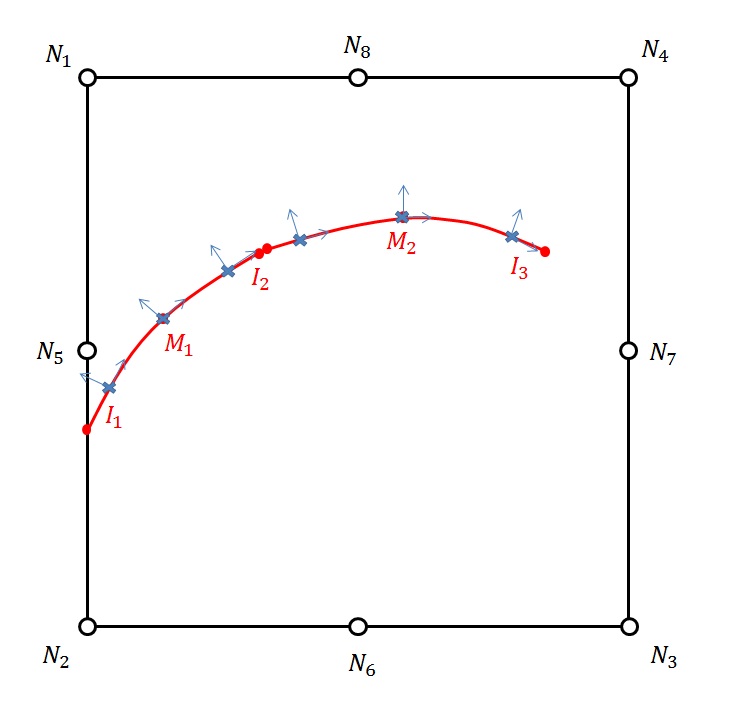

Figure 3.3.5-1: Example of multi-division in 2D

3.3.6. Maximum number of sub-items#

In order to correctly size the data structures relating to the sub-division, it is necessary to determine the maximum number of sub-elements generated by the sub-division phase, according to the type of the initial mesh.

In this paragraph, it is considered that the element is cut by only one crack.

The case with the largest number of sub-elements is the one described in paragraph [§ 3.3.4.7], which results in 6 sub-elements.

A pentahedron being previously divided into three tetrahedra, one might think that the largest number of sub-elements generated is where each of these three tetrahedra is in turn sub-divided into 6 sub-elements; which would result in a final division into 18 sub-elements. This would lead to a final division into 18 sub-elements. However, such a scenario is impossible. At most, out of the three tetrahedra, two will be divided into 6 sub-elements and only one will be divided into 4 sub-elements, resulting in 16 sub-elements.

As before, the case where all the six tetrahedra are each sub-divided into 6 sub-elements is impossible. The maximum case is the one that corresponds to the case mentioned in the previous paragraph: the two pentahedra deduced from the hexahedron (see Figure 3.3.1-1) are each sub-divided into 16 sub-elements. So the number of sub-elements is \((6+6+4)+(6+6+4)=32\).

A finite element XFEM can be cut by a maximum of 4 cracks in codeaster. The maximum number of sub-elements generated is then difficult to evaluate and an increase in the maximum number of sub-elements would be too high. In fact, if we take the maximum number of sub-elements generated by a single crack, i.e. 32, and if we consider that the 32 tetrahedra can give rise to 6 tetrahedra for each of the following cracks, we obtain \(32\ast \mathrm{6³}=6912\). This number of integration sub-elements for a hexahedron is completely unattainable because it goes without saying that the tetrahedra resulting from the first division will not all be cut by the following cracks, but it is difficult to show a better major component. The choice is made to set the maximum number of sub-elements for multi-cracked elements to 4 times the maximum number of sub-elements for a single crack, i.e. \(32\ast 4=128\) in 3D and \(4\ast 6=24\) in 2D. These numbers can easily be exceeded for elements cut by 3 or 4 cracks. In this case, the cutting procedure returns an error and the user is invited to refine the mesh used in order to avoid that finite elements are streaked with cracks, which would also lead to major packaging problems (see paragraph [§ 3.2.6]).

3.3.7. 2D subdivision#

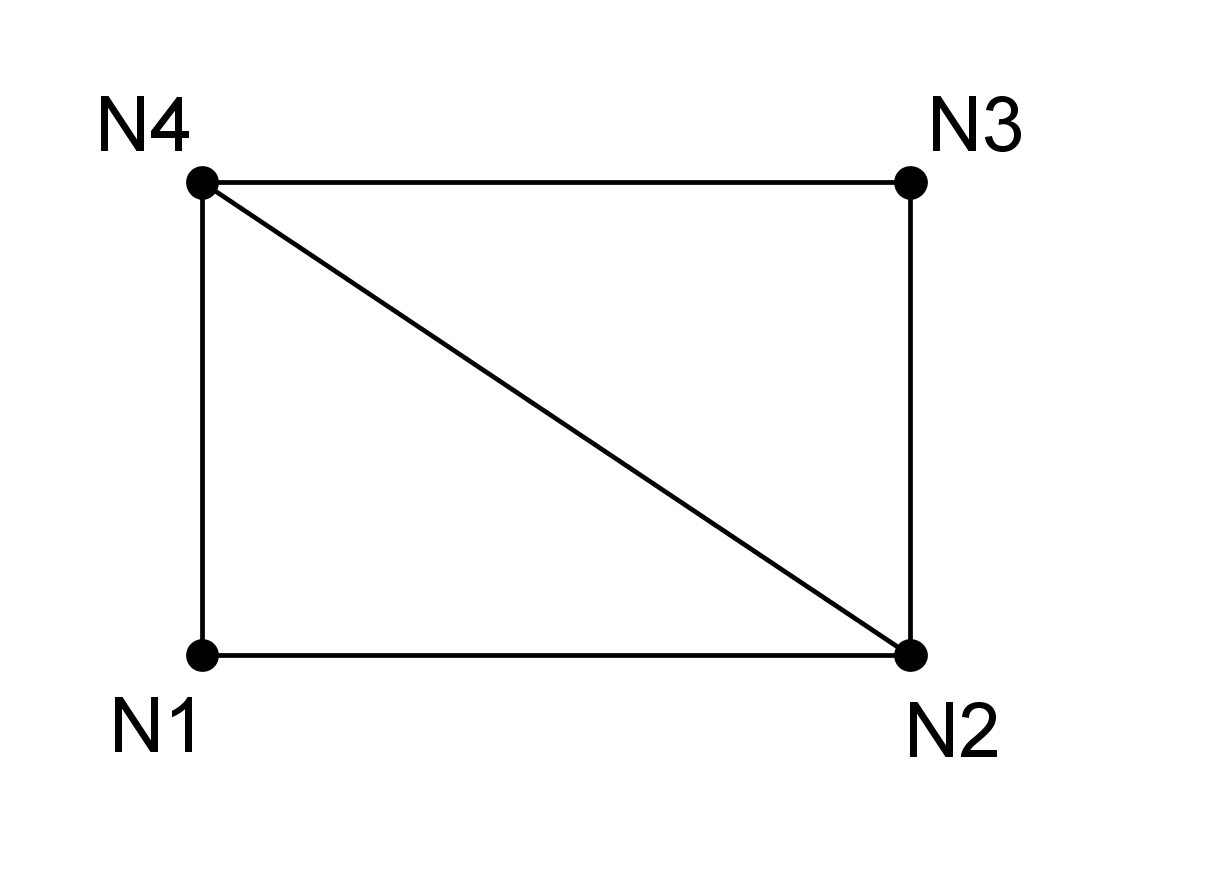

A method comparable to 3D cutting is used for 2D cutting. The quadrangles will be subdivided into triangles that are themselves subdivided according to the passage of the crack.

We cut the quadrangles into 2 triangles:

Figure 3.3.7-1 : Subdivision of a quadrangle into triangles: linear (left), quadratic (right)

quadrangles |

triangles |

\(\mathit{N1}\mathit{N2}\mathit{N3}\mathit{N4}\) |

\(\mathit{N1}\mathit{N2}\mathit{N4}\) |

\(\mathit{N2}\mathit{N3}\mathit{N4}\) |

|

\(\mathit{N1}\mathit{N2}\mathit{N3}\mathit{N4}\mathit{N5}\mathit{N6}\mathit{N7}\mathit{N8}\) |

\(\mathit{N1}\mathit{N2}\mathit{N4}\mathit{N5}\mathit{N9}\mathit{N8}\) |